D Supp. materials for ch. 3

D.1 Additional analyses: Exp. 1

D.1.1 Response time, confidence, and metacognitive sensitivity differences

In detection, participants were generally slower to deliver ‘no’ responses compared to ‘yes’ responses (median difference: 85.37 ms, \(t(9) = -3.46\), \(p = .007\) for a t-test on the log-transformed response times; see Fig. 3.3, third row). No significant difference in response times was observed for the discrimination task (median difference: 6.16 ms, \(t(9) = -0.43\), \(p = .676\)).

Confidence in detection was generally higher than in discrimination (\(M_d = 0.06\), 95% CI \([0.01\), \(0.12]\), \(t(9) = 2.49\), \(p = .035\); see Fig. 3.3, fourth row). Within detection, confidence in ‘yes’ responses was generally higher than confidence in ‘no’ responses (\(M = 0.08\), 95% CI \([0.03\), \(0.13]\), \(t(9) = 3.49\), \(p = .007\)). No difference in average confidence levels was found between the two discrimination responses (\(M = 0.02\), 95% CI \([-0.03\), \(0.06]\), \(t(9) = 0.91\), \(p = .384\)).

Following Meuwese, Loon, Lamme, & Fahrenfort (2014), we extracted response-conditional type-2 ROC (rc-ROC) curves for the two tasks. Unlike traditional type-I ROC curves that provide a summary of subjects’ ability to distinguish between two external world states, type 2 ROC curves represent their ability to track the accuracy of their own responses. The area under the response-conditional ROC curve (auROC2) is a measure of metacognitive sensitivity, with higher values corresponding to more accurate metacognitive monitoring.

Mean response-conditional ROC curves for the two responses in the discrimination task closely matched (\(M = 0.00\), 95% CI \([-0.05\), \(0.05]\), \(t(9) = 0.13\), \(p = .900\)), indicating that on average, participants had similar metacognitive insight into the accuracy of the two discrimination responses. In contrast, auROC2 estimates for ‘yes’ responses were significantly higher than for ‘no’ responses, indicating a metacognitive asymmetry between the two detection responses (group difference in auROC2: \(M = 0.11\), 95% CI \([0.03\), \(0.18]\), \(t(9) = 3.28\), \(p = .010\)).

D.1.2 zROC curves

An asymmetry in metacognitive sensitivity for ‘yes’ and ‘no’ responses is predicted by unequal-variance Signal Detection Theory (uvSDT). Specifically, if the signal distribution is wider than the noise distribution, the overlap between the distributions will be more pronounced for misses and correct rejections than for hits and false alarms, making metacognitive judgments for ‘no’ responses objectively more difficult. Unequal-variance SDT predicts that plotting the type-1 ROC curve in z-space (taking the inverse cumulative distribution of the confidence rating histogram) will result in a straight line with a slope equal to \(\frac{\sigma_{noise}}{\sigma_{signal}}\). Because the variance of the signal distribution is higher than that of the noise distribution, zROC slopes are typically shallow, with slopes below 1.

We used linear regression to estimate the slope of the zROC curve. To control for underestimation of the slope due to regression to the mean (Wickens, 2002, p. 56), we fitted two regression models for the task data of each participant: one predicting \(Z(h)\) based on \(Z(f)\) (slope \(s_1\)) and one predicting \(Z(f)\) based on \(Z(h)\) (slope \(s_2\)). We then used \(\frac{log(s_1)-log(s2)}{2}\) as a bias-free measure of the zROC slope. In equal-variance SDT, this value is predicted to be 0, corresponding to a slope of 1.

Indeed, slopes were generally shallow for detection zROC curves (as predicted by an unequal-variance SDT model; \(M = -0.15\), 95% CI \([-0.27\), \(-0.04]\), \(t(9) = -2.95\), \(p = .016\)), and not significantly different from 1 for discrimination zROC curves (as predicted by equal-variance SDT; \(M = 0.00\), 95% CI \([-0.09\), \(0.10]\), \(t(9) = 0.07\), \(p = .946\)).

These results support a difference in the variance-structure of the representation of signal and noise, such that the representation of signal is more varied across trials. However, it is still possible that some of the metacognitive asymmetry in detection (the difference in auROC between ‘yes’ and ‘no’ responses) reflects additional higher-order processes that cannot be captured by a first-order signal-detection model. If this was the case, zROC curves for detection should not only be more shallow, but also less linear than for discrimination, reflecting poorer fit of the signal-detection model to detection. In order to test if this was the case, we compared the subject-wise \(R^2\) values for the detection and discrimination zROC regression lines. \(R^2\) values reflect the goodness of fit of a linear model to the data. These values were similar for the two tasks (\(M_d = -0.01\), 95% CI \([-0.03\), \(0.01]\), \(t(9) = -0.91\), \(p = .385\)), suggesting that a first-order SDT model accounted equally well for the two tasks.

D.1.3 Confidence response-time alignment

Following our pre-registered analysis plan, we extracted a Spearman correlation coefficient between confidence and response times separately for the two tasks and four responses. We find a negative correlation in all four cases (discrimination responses: -0.40 and -0.39, detection ‘yes’: -0.41, detection ‘no’: -0.33). As hypothesized, this negative correlation was significantly attenuated in detection ‘no’ responses compared to detection ‘yes’ responses (tested with a one-tailed t-test: \(t(9) = -1.97\), \(p = .040\)). The difference in correlation strength between detection ‘no’ responses and discrimination responses was only marginally significant (\(t(9) = -1.68\), \(p = .063\)).

D.1.4 Global metacognitive estimates

At the end of each 100-trial block, participants estimated their block-wise accuracy. Mean estimated accuracy was 0.71 for discrimination and 0.69. These figures are close to true correct response rates: 0.74 in discrimination and 0.72 in detection.

A difference of 0.03 between mean accuracy estimates for discrimination and detection was not significant at the group level (\(t(9) = 1.71\), \(p = .121\)).

D.2 Additional analyses: Exp. 2

D.2.1 Response time, confidence, and metacognitive sensitivity differences

Participants were slower to deliver ‘no’ responses compared to ‘yes’ responses (median difference: 77.12 ms, \(t(101) = -6.84\), \(p < .001\) for a t-test on the log-transformed response times; see Fig. 3.7, third row). No significant difference in response times was observed for the discrimination task (median difference: 10.90 ms, \(t(101) = -1.40\), \(p = .165\)).

Confidence in detection was generally lower than in discrimination, consistent with lower accuracy in this task (\(M_d = -0.09\), 95% CI \([-0.11\), \(-0.07]\), \(t(101) = -8.41\), \(p < .001\); see Fig. 3.7, fourth row). Within detection, confidence in ‘yes’ responses was generally higher than confidence in ‘no’ responses (\(M = 0.10\), 95% CI \([0.07\), \(0.12]\), \(t(101) = 8.15\), \(p < .001\)). No difference in average confidence levels was observed between the two discrimination responses (\(M = 0.00\), 95% CI \([-0.02\), \(0.02]\), \(t(101) = -0.03\), \(p = .974\)).

In contrast to the results of Exp. 1, auROC2 for ‘yes’ and ‘no’ responses were not significantly different (group difference in area under the response-conditional curve, AUROC2: \(M = 0.02\), 95% CI \([-0.02\), \(0.06]\), \(t(58) = 1.13\), \(p = .264\); see Fig. 3.7, first and second rows). auROC2s were not significantly different also when controlling for type-1 response and confidence biases (\(M = 0.01\), 95% CI \([-0.03\), \(0.05]\), \(t(58) = 0.59\), \(p = .560\)).

D.2.2 zROC curves

Unlike in Experiment 1, detection zROC slopes were not significantly different from 1 (\(M = -0.04\), 95% CI \([-0.09\), \(0.01]\), \(t(100) = -1.52\), \(p = .131\)), whereas discrimination zROC slopes were significantly shallower than 1 (\(M = -0.14\), 95% CI \([-0.25\), \(-0.02]\), \(t(93) = -2.29\), \(p = .024\)). This unexpected result indicates equal variance for the signal and noise distributions, but higher variance for targets presented on the right than on the left. Furthermore, first-order SDT fitted the data significantly better for the detection task than for the discrimination (difference in \(R^2\) for the two tasks: \(M = 0.15\), 95% CI \([0.12\), \(0.18]\), \(t(93) = 8.85\), \(p < .001\)).

D.3 Additional analyses: Exp. 3

D.3.1 Response time, confidence, and metacognitive sensitivity differences

Participants were also slower to deliver ‘no’ responses compared to ‘yes’ responses (median difference: 71.81 ms, \(t(97) = -6.66\), \(p < .001\) for a t-test on the log-transformed response times; see Fig. 3.11, third row). No significant difference in response times was observed for the discrimination task (median difference: 19.28 ms, \(t(96) = -0.28\), \(p = .781\)).

Confidence in detection was generally lower than in discrimination, consistent with lower accuracy in this task (\(M_d = -0.04\), 95% CI \([-0.06\), \(-0.02]\), \(t(97) = -3.77\), \(p < .001\); see Fig. 3.11, fourth row). Within detection, confidence in ‘yes’ responses was generally higher than confidence in ‘no’ responses (\(M = 0.09\), 95% CI \([0.07\), \(0.11]\), \(t(97) = 8.00\), \(p < .001\)). No difference in average confidence levels was observed between the two discrimination responses (\(M = -0.01\), 95% CI \([-0.03\), \(0.02]\), \(t(97) = -0.65\), \(p = .519\)).

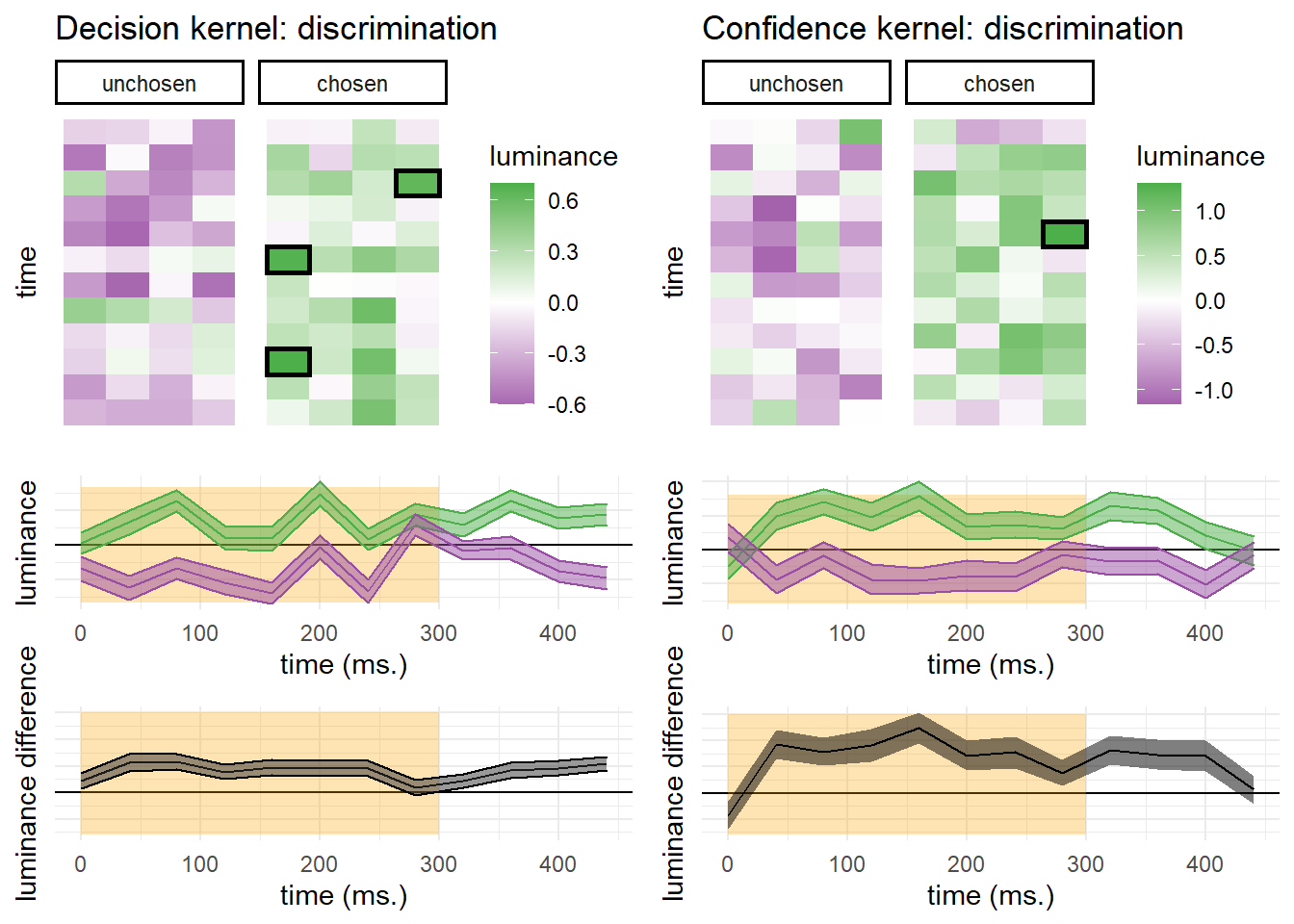

D.3.2 Reverse correlation analysis of standard trials only

In the following, we repeat the reverse correlation analysis for Exp 3. on the subset of trials where luminance was not increased by 2/255.

Discrimination decisions

Discrimination decisions were sensitive to fluctuations in luminance during the first 300 milliseconds of the trial (\(t(97) = 8.47\), \(p < .001\)). We found no evidence for a positive evidence bias in discrimination decisions, even when grouping evidence based on the location of the true signal rather than subjects’ decisions (\(t(93) = -0.23\), \(p = .819\)).

Discrimination confidence

Luminance within the first 300 milliseconds had a significant effect on confidence ratings (\(t(97) = 6.38\), \(p < .001\); see Fig. D.1, right panels). A positive evidence bias in discrimination confidence was not significant in this sample (\(t(97) = 1.42\), \(p = .157\)).

Figure D.1: Decision and confidence discrimination kernels, Experiment 3, standard trials only.

D.4 Pseudo-discrimination analysis

In our pre-registration document (https://osf.io/8u7dk/), we specified our plan for pseudo-discrimination analysis, where we analyze detection ‘signal’ trials as if they were discrimination trials:

In this analysis, we will assume that in the majority of ‘different’ trials, when participants responded ‘yes’ they correctly identified the brighter set. For example, a detection trial in which the brighter set was presented on the right and in which the participant responded ‘yes’ will be treated as a discrimination trial in which the participant responded ‘right.’ Conversely, a trial in which the brighter set was presented on the right and in which the participant responded ‘no’ will be treated as a discrimination trial in which the participant responded ‘left.’ These hypothetical responses will then be submitted to the same reverse correlation analysis described in the previous section confidence kernels.

We subsequently realized that a much simpler approach is to contrast ‘yes’ and ‘no’ responses for the true and opposite direction of motion (or flickering stimuli) in signal trials. This alternative approach does not entail treating ‘no’ responses as the successful detection of a wrong signal. The results of this analysis mostly agreed with the pre-registered pseudo-discrimination analysis. For completeness, we include the pre-registered pseudo-discrimination analysis for both experiments here.

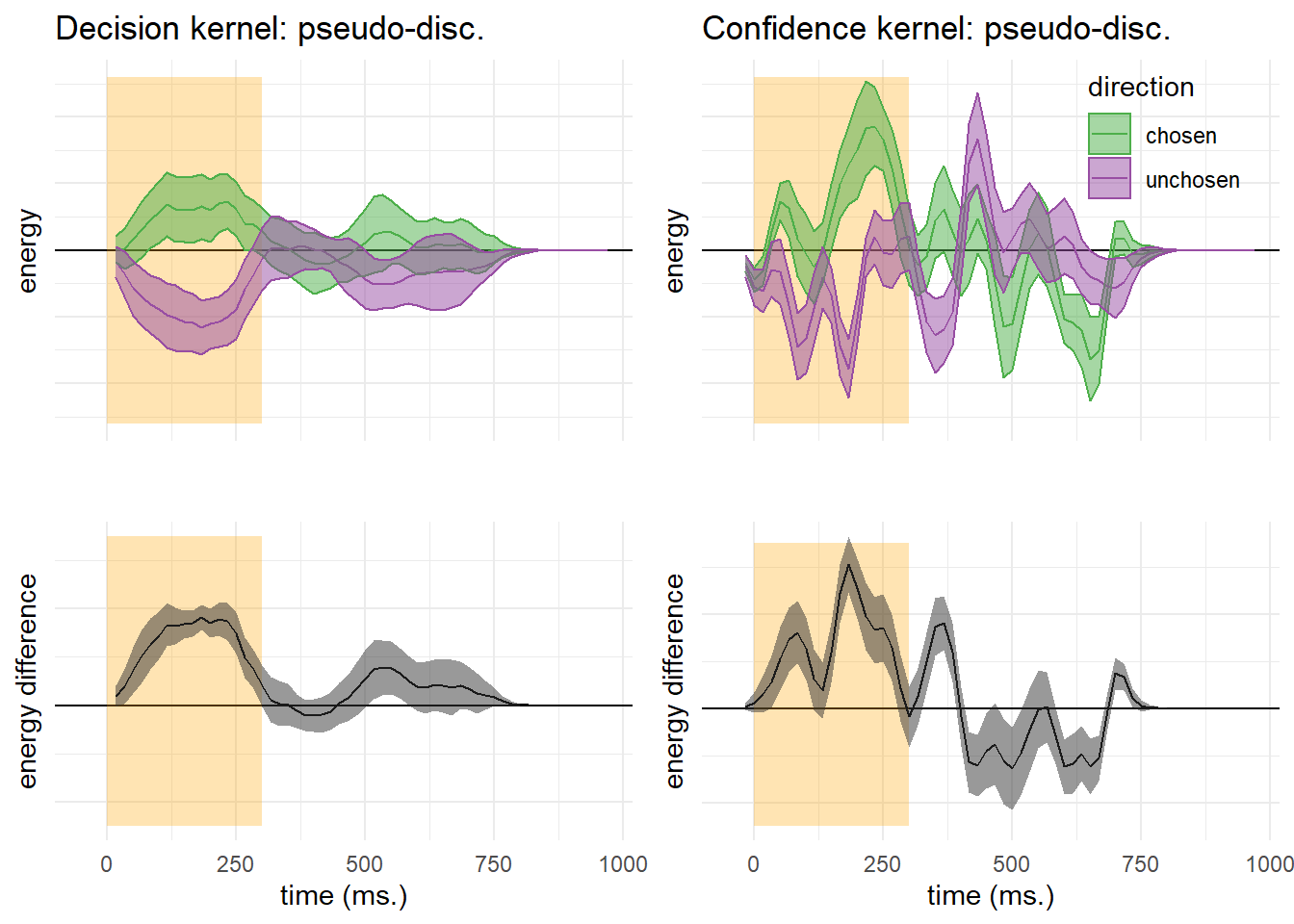

D.4.1 Exp. 1

Figure D.2: Decision and confidence pseudo-discrimination kernels, Experiment 1. Upper left: motion energy in the “chosen” (green) and “unchosen” (purple) direction as a function of time. Bottom left: a subtraction between energy in the “chosen” and “unchosen” directions. Upper right: confidence effects for motion energy in the “chosen” (green) and “unchosen” (purple) directions. Lower right: a subtraction between confidence effects in the “chosen” and “unchosen” directions. Shaded areas represent the the mean +- one standard error. The first 300 milliseconds of the trial are marked in yellow.

Pseudo-discrimination decision kernels were highly similar to discrimination decision kernels. Here also, motion energy during the first 300 milliseconds of the stimulus had a significant effect on decision (\(t(9) = 4.18\), \(p = .002\)) and on decision confidence (\(t(9) = 3.26\), \(p = .010\)). However, unlike discrimination, where motion energy in the chosen direction influenced decision confidence more than motion energy in the unchosen direction, no such bias was observed for detection responses (\(t(9) = 0.20\), \(p = .849\)).

While motion energy during the first 300 milliseconds of the trial significantly affected confidence in ‘yes’ responses (\(t(9) = 5.52\), \(p < .001\)), it had no significant effect on confidence in ‘no’ responses (\(t(9) = -0.09\), \(p = .932\)). However, given that the pseudo-discrimination analysis was performed on signal trials only, confidence kernels for ‘no’ responses were based on fewer trials than confidence kernels for ‘yes’ responses, such that the absence of a significant effect in ‘no’ responses may reflect insufficient statistical power to detect one.

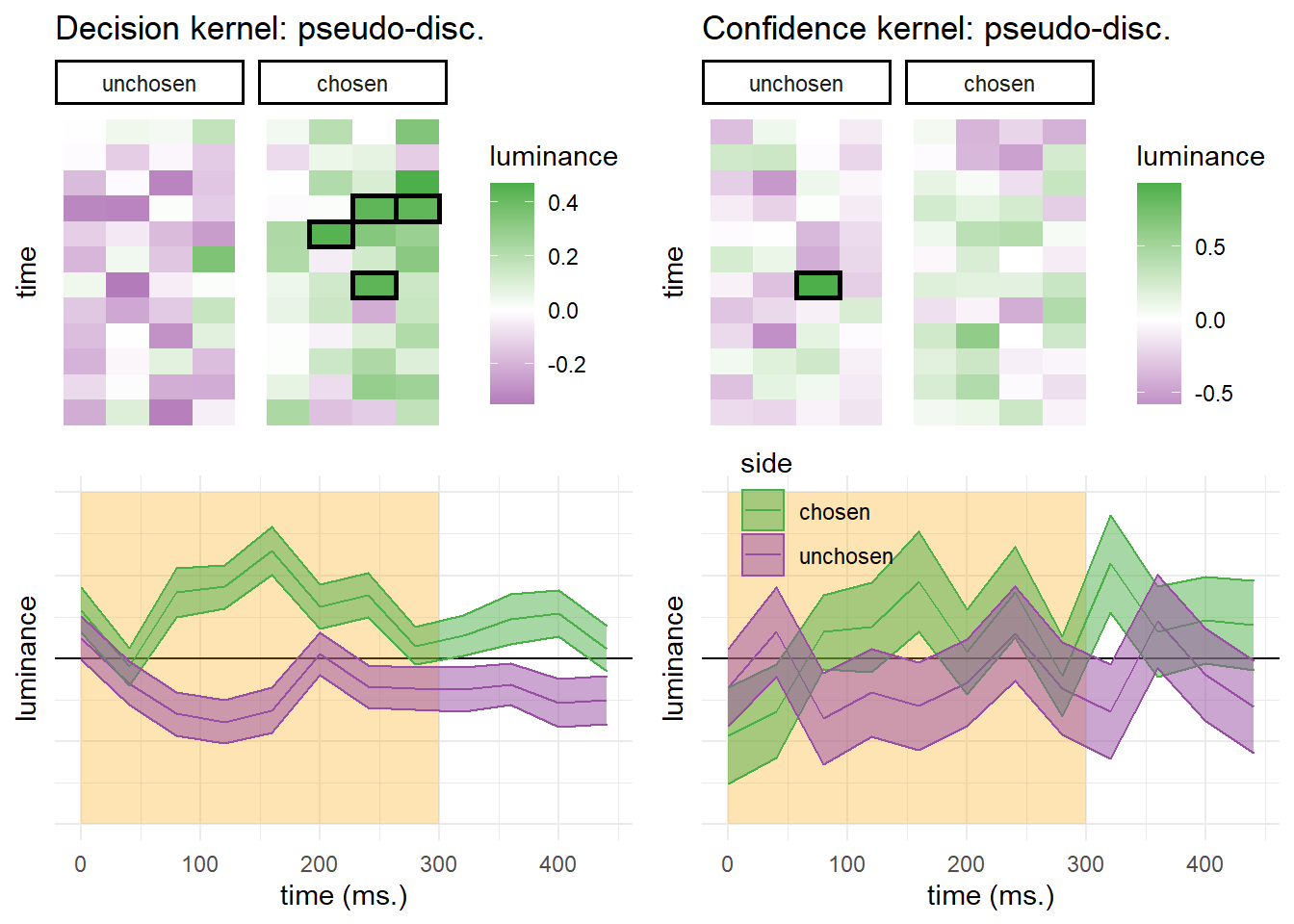

D.4.2 Exp. 2

Figure D.3: Decision and confidence pseudo-discrimination kernels, Experiment 2. Upper left: luminance in the “chosen” (green) and “unchosen” (purple) stimulus as a function of time and spatial position. Bottom left: decision kernel averaged across the four spatial positions. Upper right: confidence effects for motion energy in the “chosen” (green) and “unchosen” (purple) stimuli. Bottom right: confidence effects averaged across the four spatial positions. Shaded areas represent the the mean +- one standard error. The first 300 milliseconds of the trial are marked in yellow. Black frames denote significance at the 0.05 level controlling for family-wise error rate for 48 comparisons.

Similar to decision kernels in Exp. 2, random fluctuations in luminance during the first 300 milliseconds of the stimulus had a significant effect on decision (\(t(101) = 6.68\), \(p < .001\)). However, in Exp. 2 this anslysis revealed no effect of luminance on decision confidence (\(t(99) = 1.36\), \(p = .178\)), and no positive evidence bias in confidence judgments (\(t(99) = -0.66\), \(p = .512\)).

D.5 Stimulus-dependent noise model

D.5.1 Discrimination

Generative model

Stimuli were represented as pairs of numbers, corresponding to the two sensory channels (e.g., right and left motion). One sensory channel transmitted pure noise, and one channel had additional signal in it. The signal channel was chosen randomly for each trial with equal probability.

\[\begin{equation} x^c_t \sim \begin{cases} \mathcal{N}(0,1), & \text{if signal}.\\ \mathcal{N}(1,1), & \text{if noise}.\\ \end{cases} \end{equation}\]

On top of the presented noise, we added perceptual noise to the stimulus. Importantly, this additional noise affected the decisions and confidence ratings of the simulated agent, but did not affect trial-wise estimates of stimulus energy for the reverse correlation analysis. The noise was channel specific, and its magnitude dependent on the magnitude of the underlying signal:

\[\begin{equation} \epsilon^c_t \sim \mathcal{N}(0,2^{x^c_t}) \end{equation}\]

\[\begin{equation} x'^c_t= x^c_t+\epsilon^c_t \end{equation}\]

Inference

The log likelihood ratio is computed to decide whether it is more likely that the signal was in channel 1 or 2.

\[\begin{equation} LLR=log(p([x'^1_t,x'^2_t]|stim=[x^s,x^n])-log(p([x'^1_t,x'^2_t]|stim=[x^n,x^s]) \end{equation}\]

\[\begin{equation} decision_t=\begin{cases} \text{1}, & \text{if } LLR>1.\\ \text{2}, & \text{else}. \end{cases} \end{equation}\]

\[\begin{equation} confidence_t = |LLR| \end{equation}\]

class Model:

def __init__(self, mu, sigma, noise_factor):

self.df = pd.DataFrame()

self.mu = mu

self.sigma = sigma

self.noise_factor = noise_factor

# if noise factor > 0, approximate density function

# with grid.

if noise_factor > 0:

X = np.arange(-100,100,0.1)

Xboundaries = np.arange(-100,100.1,0.1)-0.05

marginal_signal=[0]*len(X)

marginal_noise=[0]*len(X)

for x in X:

conditional = stats.norm(x,self.noise_factor**x).pdf(X);

prior_noise = stats.norm(self.mu[0],self.sigma[0]).pdf(x)

prior_signal = stats.norm(self.mu[1],self.sigma[1]).pdf(x)

marginal_noise = [p+conditional[i]*prior_noise

for i,p in enumerate(marginal_noise)];

marginal_signal = [p+conditional[i]*prior_signal

for i,p in enumerate(marginal_signal)];

self.signal_dist = stats.rv_histogram(

(np.array(marginal_signal),Xboundaries));

self.noise_dist = stats.rv_histogram(

(np.array(marginal_noise),Xboundaries));

# else, use normal distributions.

else:

self.signal_dist = stats.norm(self.mu[1],self.sigma[1]);

self.noise_dist = stats.norm(self.mu[0],self.sigma[0])

def runModel(self, num_trials):

# first, decide which is the true direction in each trial

# (p=0.5)

self.df['direction'] = ['r' if flip else 'l'

for flip in np.random.binomial(1,0.5,num_trials)]

self.getMotionEnergy()

self.extractLLR()

self.makeDecision()

self.rateConfidence()

self.df['correct'] = self.df.apply(lambda row:

row.direction==row.decision, axis=1)

#energy in chosen direction

self.df['E_c'] = self.df.apply(lambda row:

row.E_r if row.decision=='r'

else row.E_l, axis=1)

#energy in unchosen direction

self.df['E_u'] = self.df.apply(lambda row:

row.E_l if row.decision=='r'

else row.E_r, axis=1)

def runModelForSpecifiedValues(self,

specified_values,

repetitions=1):

# no direction here

self.df['direction'] =

['x']*len(specified_values)**2*repetitions

self.df['E_r'] =

specified_values*len(specified_values)*repetitions;

self.df['E_l'] = list(np.repeat(

specified_values,len(specified_values)))*repetitions;

# how it appears to subjects

if self.noise_factor>0:

self.df['E_ra'] = self.df.apply(lambda row: row.E_r +

np.random.normal(0, self.noise_factor**row.E_r),

axis=1);

self.df['E_la'] = self.df.apply(lambda row: row.E_l +

np.random.normal(0, self.noise_factor**row.E_l),

axis=1);

else:

self.df['E_ra']=self.df['E_r'];

self.df['E_la']=self.df['E_l'];

self.extractLLR()

self.makeDecision()

self.rateConfidence()

self.df['correct'] = self.df.apply(lambda row:

row.direction==row.decision, axis=1)

#energy in chosen direction

self.df['E_c'] = self.df.apply(lambda row:

row.E_r if row.decision=='r'

else row.E_l, axis=1)

#energy in unchosen direction

self.df['E_u'] = self.df.apply(lambda row:

row.E_l if row.decision=='r'

else row.E_r, axis=1)

def getMotionEnergy(self):

# sample the motion energy for left and right as a function of

# the true direction

self.df['E_r'] = self.df.apply(lambda row:

np.random.normal(self.mu[1],self.sigma[1])

if row.direction=='r'

else np.random.normal(self.mu[0],self.sigma[0]),

axis=1)

self.df['E_l'] = self.df.apply(lambda row:

np.random.normal(self.mu[1],self.sigma[1])

if row.direction=='l'

else np.random.normal(self.mu[0],self.sigma[0]),

axis=1)

# how it appears to subjects

if self.noise_factor>0:

self.df['E_ra'] = self.df.apply(lambda row: row.E_r +

np.random.normal(0, self.noise_factor**row.E_r),

axis=1);

self.df['E_la'] = self.df.apply(lambda row: row.E_l +

np.random.normal(0, self.noise_factor**row.E_l),

axis=1)

else:

self.df['E_ra']=self.df['E_r'];

self.df['E_la']=self.df['E_l'];

def extractLLR(self):

# extract the Log Likelihood Ratio (LLR)

#log(p(Er|r))-log(p(Er|l)) + log(p(El|r))-log(p(El|l))

self.df['LLR'] = self.df.apply(lambda row:

np.log(self.signal_dist.pdf(row.E_ra))-

np.log(self.noise_dist.pdf(row.E_ra)) +

np.log(self.noise_dist.pdf(row.E_la))-

np.log(self.signal_dist.pdf(row.E_la)), axis=1)

def makeDecision(self):

# we assume that our participant chooses the direction associated

# with higher likelihood

self.df['decision'] = self.df.apply(lambda row:

'r' if row.LLR>0 else 'l',

axis=1)

def rateConfidence(self):

# and rates their confidence in proportion to the absolute LLR

self.df['confidence'] = abs(self.df['LLR'])D.5.2 Detection

Generative model

Similar to detection, except that on half of the trials both channels transmitted noise only.

Inference

The log likelihood ratio is computed to decide whether it is more likely that the signal was present or absent.

\[\begin{equation} p(x|signal)= 0.5 \times p([x'^1_t,x'^2_t]|stim=[x^s,x^n]) \\ + 0.5 \times p([x'^1_t,x'^2_t]|stim=[x^n,x^s]) \end{equation}\]

\[\begin{equation} p(x|noise)= p([x'^1_t,x'^2_t]|stim=[x^n,x^n]) \end{equation}\]

\[\begin{equation} LLR=log(p(x|signal)) - log(p(x|noise)) \end{equation}\]

\[\begin{equation} decision_t=\begin{cases} \text{1}, & \text{if } LLR>1.\\ \text{2}, & \text{else}. \end{cases} \end{equation}\]

\[\begin{equation} confidence_t = |LLR| \end{equation}\]

class DetectionModel(Model):

def runModel(self, num_trials):

# first, decide which is the true direction in each trial

#(p=0.5)

self.df['direction'] = ['r' if flip else 'l'

for flip in np.random.binomial(1,0.5,num_trials)]

# decide whether motion is present or absent.

self.df['motion'] = ['p' if flip else 'a'

for flip in np.random.binomial(1,0.5,num_trials)]

self.getMotionEnergy()

self.extractLLR()

self.makeDecision()

self.rateConfidence()

self.df['correct'] = self.df.apply(lambda row:

row.motion==row.decision,

axis=1)

#energy in true direction

self.df['E_t'] = self.df.apply(lambda row:

row.E_r if row.direction=='r'

else row.E_l,

axis=1)

#energy in opposite direction

self.df['E_o'] = self.df.apply(lambda row:

row.E_l if row.direction=='r'

else row.E_r,

axis=1)

def runModelForSpecifiedValues(self, specified_values, repetitions=1):

# no direction/motion here

self.df['direction'] =

['x']*len(specified_values)**2*repetitions

self.df['motion'] =

['x']*len(specified_values)**2*repetitions

self.df['E_r'] =

specified_values*len(specified_values)*repetitions;

self.df['E_l'] = list(np.repeat(

specified_values,len(specified_values)))*repetitions;

# how it appears to subjects

if self.noise_factor>0:

self.df['E_ra'] = self.df.apply(lambda row: row.E_r +

np.random.normal(0, self.noise_factor**row.E_r),

axis=1);

self.df['E_la'] = self.df.apply(lambda row: row.E_l +

np.random.normal(0, self.noise_factor**row.E_l),

axis=1)

else:

self.df['E_ra']=self.df['E_r'];

self.df['E_la']=self.df['E_l'];

self.extractLLR()

self.makeDecision()

self.rateConfidence()

self.df['correct'] =

self.df.apply(lambda row:

row.motion==row.decision, axis=1)

def getMotionEnergy(self):

# sample the motion energy for left and right as a function of

# the true direction

self.df['E_r'] = self.df.apply(lambda row:

np.random.normal(self.mu[1],self.sigma[1])

if row.direction=='r' and row.motion=='p'

else np.random.normal(self.mu[0],self.sigma[0]),

axis=1)

self.df['E_l'] = self.df.apply(lambda row:

np.random.normal(self.mu[1],self.sigma[1])

if row.direction=='l' and row.motion=='p'

else np.random.normal(self.mu[0],self.sigma[0]),

axis=1)

# how it appears to subjects

if self.noise_factor>0:

self.df['E_ra'] = self.df.apply(lambda row: row.E_r +

np.random.normal(0, self.noise_factor**row.E_r),

axis=1);

self.df['E_la'] = self.df.apply(lambda row: row.E_l +

np.random.normal(0, self.noise_factor**row.E_l),

axis=1)

else:

self.df['E_ra']=self.df['E_r'];

self.df['E_la']=self.df['E_l'];

def extractLLR(self):

self.df['LLR'] = self.df.apply(lambda row:

np.log(0.5*self.signal_dist.pdf(row.E_ra)*

self.noise_dist.pdf(row.E_la) +

0.5*self.signal_dist.pdf(row.E_la)*

self.noise_dist.pdf(row.E_ra)) -

np.log(self.noise_dist.pdf(row.E_la) *

self.noise_dist.pdf(row.E_ra)),

axis=1)

def makeDecision(self):

# we assume that our participant just chooses the option

# associated with higher likelihood

self.df['decision'] = self.df.apply(lambda row:

'p' if row.LLR>0

else 'a',

axis=1)

def rateConfidence(self):

# and rates their confidence in proportion to the absolute

# LLR

self.df['confidence'] = abs(self.df['LLR'])D.5.3 Effects of evidence on decision and confidence: Exp. 2 and 3

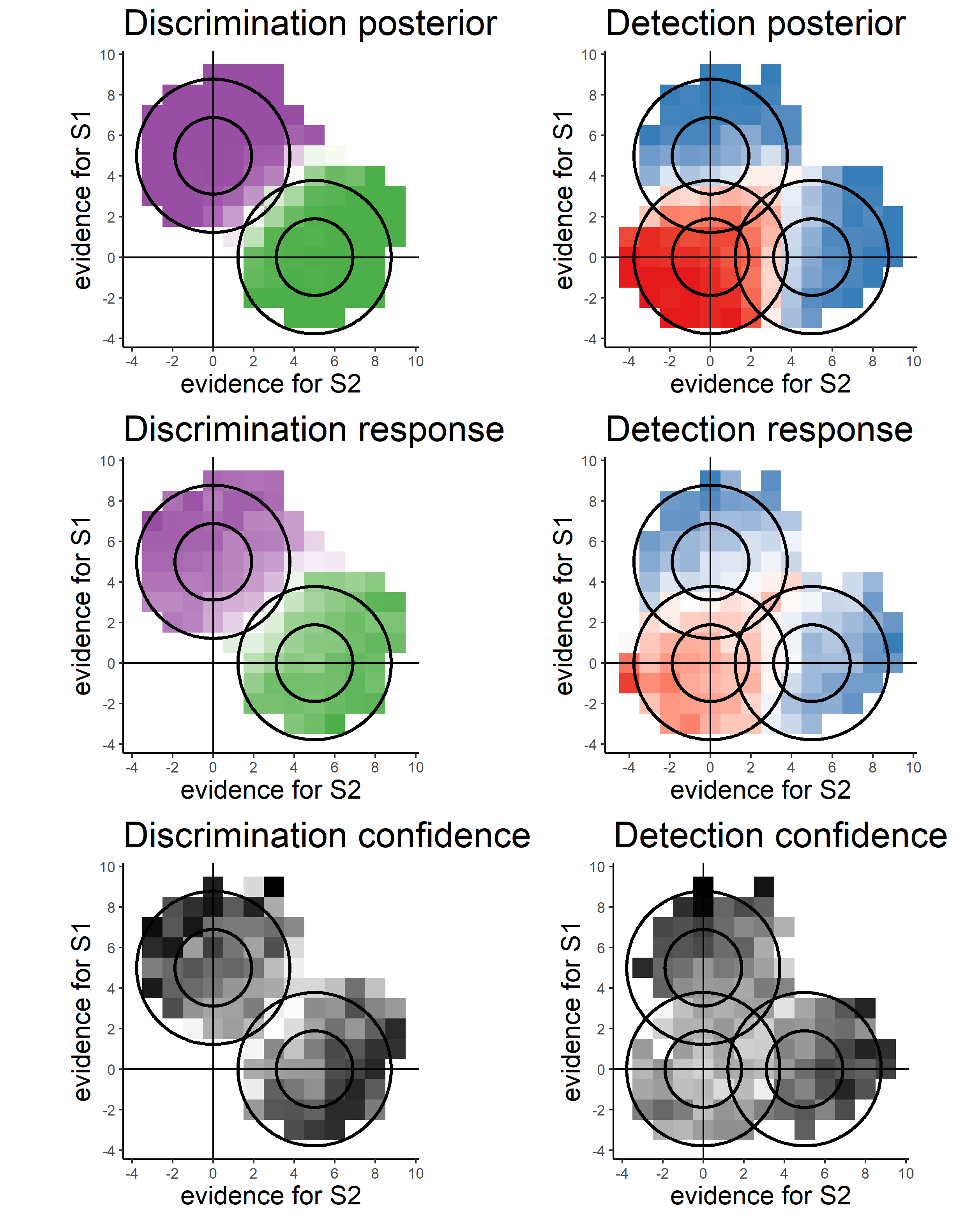

To compare participants’ empirical behaviour to our model simulations, we plotted optimal behaviour, participants’ responses, and confidence in correct responses, as a function of perceptual evidence in a two-dimensional representational space. First, for each trial we extracted mean luminance (minus background luminance) in the first 300 milliseconds in the right and left stimuli. These numbers were rounded to the closest integer. For each tuple of such integers, we extracted the posterior probability for stimulus category (Fig. D.4, top row), participants’ empirical discrimination and detection decisions (middle row), and participants’ subjective confidence in correct responses (bottom row).

Figure D.4: Top row: posterior probability of stimulus category given perceptual evidence for discrimination (left) and detection (right). Middle row: decision probability as a function of perceptual evidence. Bottom row: mean confidence in correct responses as a function of perceptual evidence.