Chapter 3 Evidence weightings in confidence judgments for detection and discrimination

Matan Mazor, Lucie Charles, Roni Or Maimon Mor & Stephen M. Fleming

In Chapters 1 and 2 I examined inference about absence and its relation to self-modelling in visual search, where a target is present or absent in an array of distractors. In this Chapter, I examine inference about absence in a near-threshold detection setting, where the location of the target is known and no distractors are present. Previous studies of near-threshold discrimination revealed a positive evidence bias (PEB) in discrimination confidence: confidence in perceptual decisions is more sensitive to evidence in support of the decision than to conflicting evidence. Recent theoretical proposals suggest that a PEB is due to observers adopting a detection-like strategy when rating their confidence, one that has functional benefits for metacognition in real-world settings where detectability and discriminability often go hand in hand. In three experiments (one lab-based and two online) we first successfully replicate a PEB in discrimination confidence. We then show that a PEB is observed in detection decisions, where participants report the presence or absence of a stimulus, regardless of its identity. We discuss our findings in relation to models that account for a positive evidence bias as emerging from a confidence-specific heuristic, and alternative models where decision and confidence are generated by the same, Bayes-rational process.

3.1 Introduction

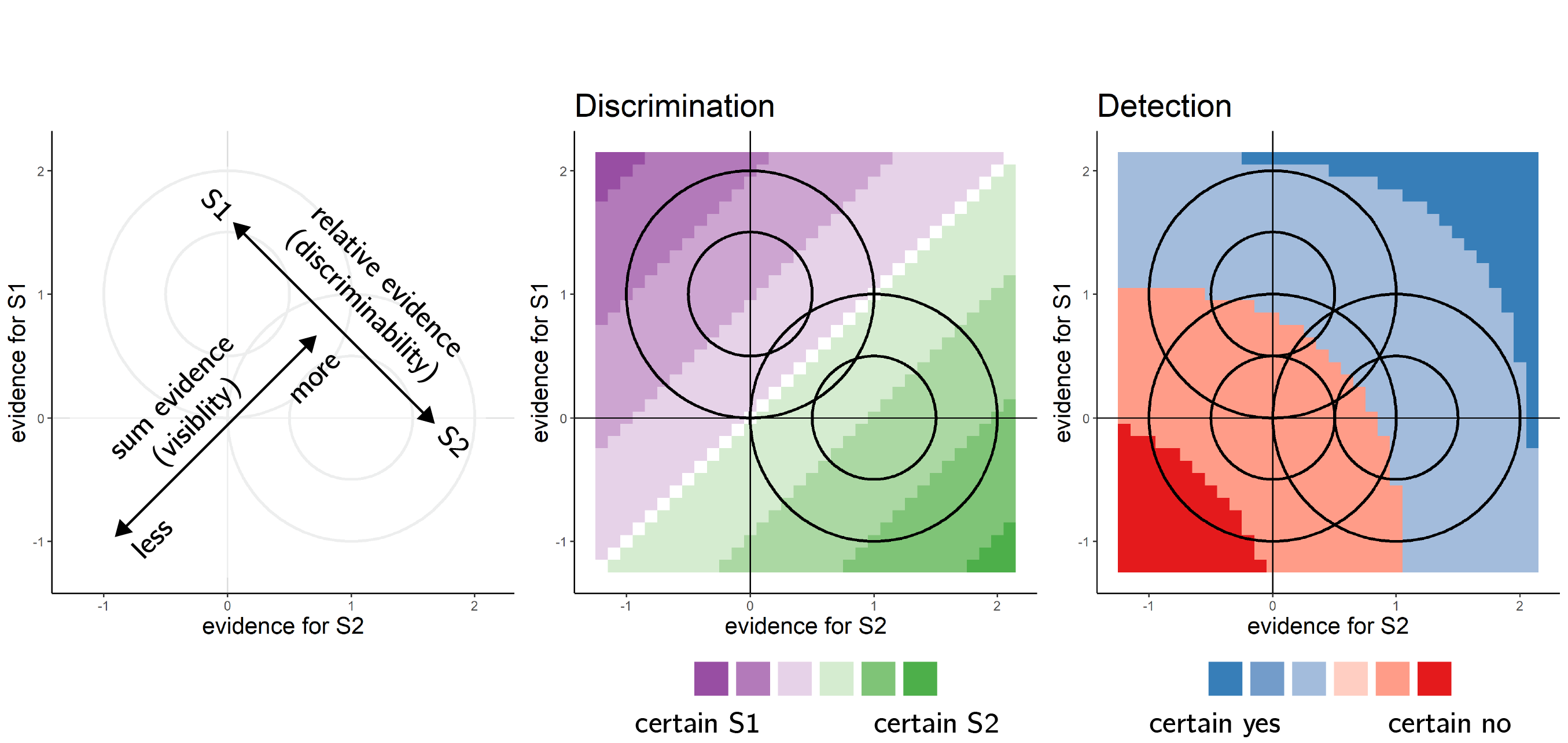

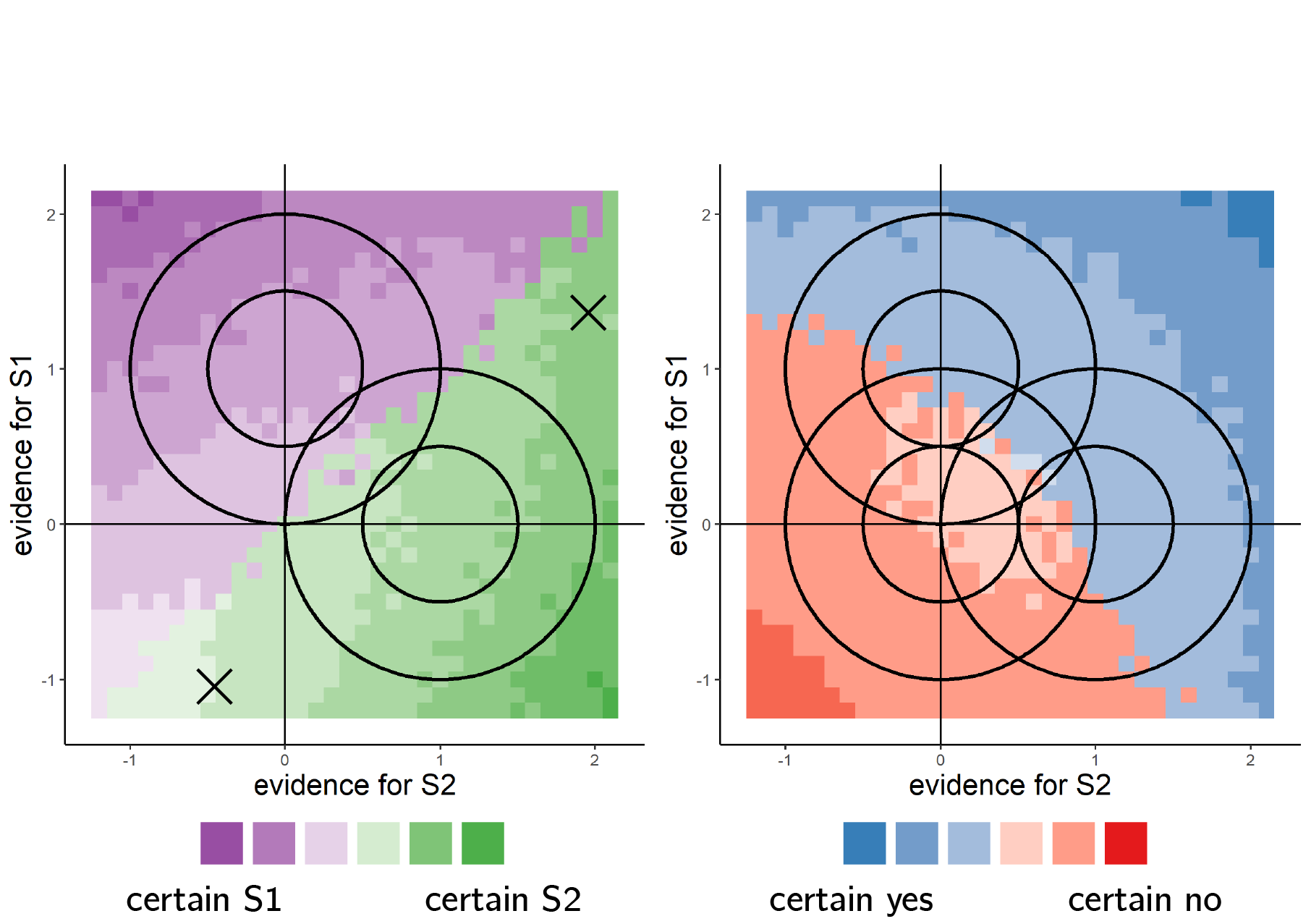

When considering two alternative hypotheses, the probability that the chosen hypothesis is correct is not only a function of the likelihood of observations under the chosen hypothesis, but also under the unchosen one. For example, when deciding that a random dot display was drifting to the right and not to the left, confidence should not only positively weigh motion energy to the right (positive evidence), but also negatively weigh motion energy to the left (negative evidence). However, when rating their subjective confidence, subjects place disproportionate weight on evidence in favour of the choice, giving rise to a positive evidence bias (Koizumi, Maniscalco, & Lau, 2015; Peters et al., 2017; Rollwage et al., 2020; Samaha & Denison, 2020; Sepulveda et al., 2020; Zylberberg, Barttfeld, & Sigman, 2012). Put differently, confidence ratings in discrimination are sensitive not only to the relative evidence of the chosen hypothesis compared with the unchosen one (also termed balance of evidence), but also to the sum evidence for the two hypotheses [which for perceptual decisions is often related to visibility; see Fig. 3.1, left panel; Rausch, Hellmann, & Zehetleitner (2018)].

Figure 3.1: Discrimination and detection in a two-dimensional Signal Detection Theory model. Left: in a two-dimensional SDT model, percepts \(e\) are sampled from one of two Gaussian distributions (here centered at (0,1) and (1,0)). We define relative evidence as \(e_{S1}-e_{S2}\) and sum evidence as \(e_{S1}+e_{S2}\). Circles represent cross-sections of two-dimensional distributions. Center and right: response and confidence accuracy are maximized when based on a log-likelihood ratio for the two stimulus categories. Center: in discrimination, this yields optimal decision and confidence criteria that are based on relative evidence (distance from the main diagonal), irrespective of sum evidence. Right: in detection, percepts in the absence of a stimulus are sampled from a Gaussian distribution centered at (0,0). This yields optimal decision and confidence that are based on a non-linear interaction between relative and sum evidence.
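The two decision rules described in the caption can be made concrete with a minimal sketch. It assumes equal, isotropic Gaussian noise with standard deviation `sigma` (a hypothetical parameter that the caption does not specify), takes S1 to be the category centred at (1,0), and assumes that the two signal categories are equally likely in detection. Under these assumptions the discrimination log-likelihood ratio reduces to relative evidence divided by \(\sigma^2\), whereas the detection log-likelihood ratio depends on both sum and relative evidence.

```python
import math

def log_gauss(e, mu, sigma):
    """Log density of an isotropic 2D Gaussian, up to a constant shared by all categories."""
    return -((e[0] - mu[0]) ** 2 + (e[1] - mu[1]) ** 2) / (2 * sigma ** 2)

def llr_discrimination(e, sigma=1.0):
    """log p(e | S1) - log p(e | S2), with S1 at (1,0) and S2 at (0,1) (assumed labelling)."""
    return log_gauss(e, (1, 0), sigma) - log_gauss(e, (0, 1), sigma)

def llr_detection(e, sigma=1.0):
    """log p(e | present) - log p(e | absent); 'present' is an equal mixture of S1 and S2."""
    present = 0.5 * math.exp(log_gauss(e, (1, 0), sigma)) \
            + 0.5 * math.exp(log_gauss(e, (0, 1), sigma))
    absent = math.exp(log_gauss(e, (0, 0), sigma))
    return math.log(present / absent)

# Two percepts with the same relative evidence (0.5) but different sum evidence.
weak, strong = (0.8, 0.3), (1.8, 1.3)
print(llr_discrimination(weak), llr_discrimination(strong))  # identical
print(llr_detection(weak), llr_detection(strong))            # differ
```

Writing \(s = e_{S1}+e_{S2}\) and \(r = e_{S1}-e_{S2}\), the detection quantity in this sketch equals \((s-1)/(2\sigma^2) + \log\cosh\!\big(r/(2\sigma^2)\big)\), which makes the joint dependence on sum and relative evidence explicit.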

To account for this apparently irrational discounting of incongruent evidence in confidence formation, Maniscalco, Peters, & Lau (2016) point out that outside of a lab setting, representational spaces are so high-dimensional that keeping track of evidence for every possible stimulus category is not feasible. For example, to be confident that an object is an apple, one would have to incorporate evidence for this object not being an orange, a banana, a book and a ferret, among infinitely many other unsupported hypotheses. To resolve this engineering challenge, metacognitive systems may have evolved to weigh evidence for the chosen hypothesis only, while ignoring conflicting evidence. This is similar to rating confidence not in the identity of a stimulus relative to other hypothetical stimuli, but in the presence of a stimulus relative to absence. Such a strategy is reasonable, as in Signal Detection space, samples that are farther away from the origin (high visibility) are on average farther away from the discrimination criterion (high discriminability). This strategy is then carried over to the lab, where decisions are made in low-dimensional representational spaces, and where keeping track of evidence for the two alternative stimulus categories is in fact feasible.

A more recent model identified the origin of this response-congruent heuristic not in the curse of dimensionality, but in the variance structure of perceptual evidence (Miyoshi & Lau, 2020). In a series of simulations, the authors augmented a bidimensional Signal Detection model with realistic assumptions about the sensory encoding of signal and noise, most importantly that the variance of signal tends to be higher than that of noise. In these settings, a Response Congruent Evidence (RCE) heuristic provided more accurate confidence judgments, meaning ones that are more aligned with objective accuracy, than did a Balance of Evidence (BE) heuristic. Again, this model implies that adopting a detection-like strategy when rating one’s confidence might have functional benefits for metacognition.

Notably, both models imply a link between confidence in discrimination and detection judgments about the presence or absence of a stimulus. In a detection setting with multiple possible targets, the likelihood ratio between stimulus presence and absence is more sensitive to positive evidence for the detected stimulus than to evidence for the absence of other, undetected stimuli (see Fig. 3.1, right panel). Perhaps surprisingly, however, despite several recent studies finding that discrimination confidence is detection-like, there has been limited focus on the complementary question: do detection decisions share features of discrimination confidence, such as a positive evidence bias? In other words, when faced with a detection task where targets are drawn from two stimulus classes, would detection decisions be sensitive to sum evidence (like discrimination confidence), or to the relative evidence for the presence of one category over the other? Moreover, little is known about confidence in these detection responses: would confidence in the presence of a target stimulus be susceptible to the same positive evidence bias as confidence in stimulus type? Finally, we asked whether detection confidence ratings would be sensitive to some form of positive evidence bias not only in decisions about target presence, but also in decisions about target absence.

To examine these questions, we conducted three experiments: one lab-based (N=10, 1800 trials per participant) and two online (N=102/100, 112/168 trials per participant). Participants performed discrimination and detection decisions on noisy stimuli, and rated their confidence in their decisions. Using reverse correlation analysis, we measured the influence of random fluctuations in stimulus energy on both responses and confidence ratings, and tested for the existence of processing asymmetries between detection ‘yes’ and ‘no’ responses [in response time, general confidence, and metacognitive sensitivity; Meuwese, Loon, Lamme, & Fahrenfort (2014); Mazor, Friston, & Fleming (2020); Kellij, Fahrenfort, Lau, Peters, & Odegaard (2021); Mazor, Moran, & Fleming (2021)]. In all three experiments, we replicated previous findings of a positive evidence bias in confidence in discrimination of motion direction and relative luminance (Zylberberg, Barttfeld, & Sigman, 2012). In contrast, our understanding of decision and confidence formation in detection has evolved and changed following each experiment, as evident in our pre-registration documents. When considering the results of all three experiments together, we conclude that, similar to discrimination confidence, detection decisions and confidence ratings are also sensitive to a positive evidence bias (we use the word bias here to mean a deviation from equal weighting of positive and negative evidence, and not in the sense of a deviation from rationality). We discuss our findings with respect to recent theoretical proposals regarding the origin of a positive evidence bias in discrimination confidence.

3.2 Experiment 1

3.2.1 Methods

3.2.1.1 Participants

The research complied with all relevant ethical regulations, and was approved by the Research Ethics Committee of University College London (study ID number 1260/003). Ten participants were recruited via UCL’s psychology subject pool, and gave their informed consent prior to their participation. Each participant performed four sessions of 600 trials each, in blocks of 100 trials. Sessions took place on different days and consisted of 3 discrimination blocks interleaved with 3 detection blocks.

3.2.1.2 Experimental procedure

The experimental procedure for Exp. 1 largely followed the procedure described in Zylberberg, Barttfeld, & Sigman (2012), Exp. 1. Participants observed a random-dot kinematogram for a fixed duration of 700 ms. In discrimination trials, the direction of motion was one of two opposite directions with equal probability, and participants reported the observed direction by pressing one of two arrow keys on a standard keyboard. In detection blocks, participants reported whether there was coherent motion by pressing one of two arrow keys on a standard keyboard. In half of the detection trials dots moved coherently in one of two opposite directions, and in the other half they moved randomly.

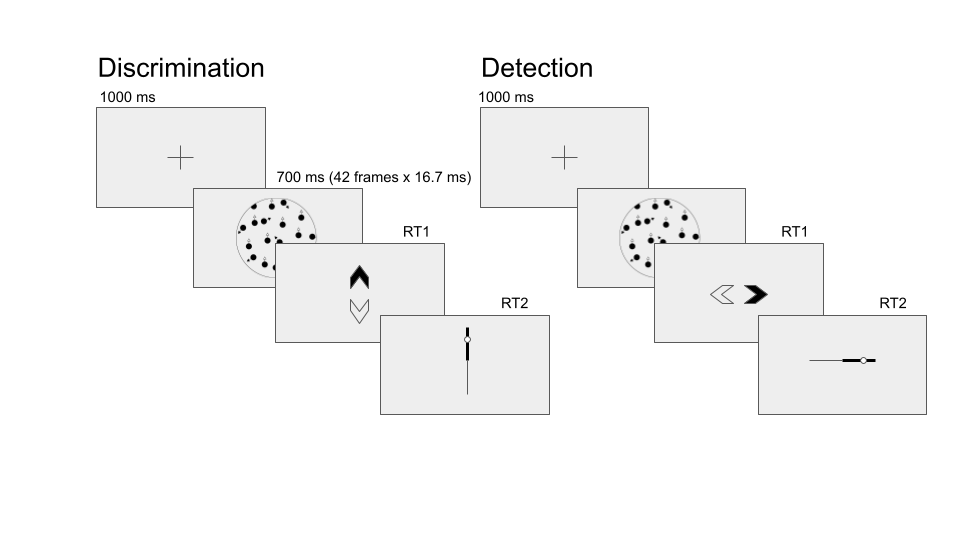

In both detection and discrimination blocks, following a decision participants indicated their confidence in their decision. Confidence was reported on a continuous scale ranging from chance to complete certainty. To avoid systematic response biases affecting confidence reports, the orientation (vertical or horizontal) and polarity (e.g., right or left) of the scale were set to agree with the type 1 response. For example, following a down arrow press, a vertical confidence bar was presented where ‘guess’ was at the center of the screen and ‘certain’ appeared at the lower end of the scale (see Fig. 3.2).

To control for response requirements, for five subjects the dots moved to the right or to the left, and for the other five subjects they moved upward or downward. The first group made discrimination judgments with the right and left keys and detection judgments with the up and down keys, and this mapping was reversed for the second group. The number of coherently moving dots (‘motion coherence’) was adjusted to maintain performance at around 70% accuracy for detection and discrimination tasks independently. This was achieved by measuring mean accuracy after every 20 trials, and adjusting coherence by a step of 3% if accuracy fell below 60% or went above 80%.
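The adjustment rule described above can be sketched as follows. This is a minimal illustration rather than the experiment code: the function name is hypothetical, the 3% step is interpreted as 0.03 on a 0-1 coherence scale, the direction of the adjustment (raising coherence when accuracy is too low, lowering it when accuracy is too high) is inferred from the stated goal, and clamping to [0, 1] is an added assumption.

```python
def update_coherence(coherence, recent_correct, step=0.03, low=0.60, high=0.80):
    """Return the coherence to use for the next 20 trials.

    recent_correct: booleans for the last 20 trials of one task
    (detection and discrimination are tracked independently).
    """
    accuracy = sum(recent_correct) / len(recent_correct)
    if accuracy < low:
        coherence += step   # task too hard: make motion more coherent (assumed direction)
    elif accuracy > high:
        coherence -= step   # task too easy: make motion less coherent (assumed direction)
    # Assumption: keep coherence a valid proportion.
    return min(1.0, max(0.0, coherence))

# Example: 10 of 20 correct (50%) raises coherence from 0.30 to 0.33.
print(update_coherence(0.30, [True] * 10 + [False] * 10))
```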

Stimuli for discrimination blocks were generated using the exact same procedure reported in Zylberberg, Barttfeld, & Sigman (2012). Trials started with a presentation of a fixation cross for one second, immediately followed by stimulus presentation. The stimulus consisted of 152 white dots (diameter = 0.14°), presented within a 6.5° circular aperture centered on the fixation point for 700 milliseconds (42 frames, frame rate = 60 Hz). Dots were grouped in two sets of 76 dots each. Every other frame, the dots of one set were replaced with a new set of randomly positioned dots. For a coherence value of \(c'\), a proportion of \(c'\) of the dots from the second set moved coherently in one direction by a fixed distance of 0.33°, while the remaining dots in the set moved in random directions by a fixed distance of 0.33°. On the next update, the sets were switched, to prevent participants from tracing the position of specific dots. Frame-specific coherence values were sampled for each screen update from a normal distribution centred around the coherence value \(c\) with a standard deviation of 0.07, with the constraint that \(c'\) must be a number between 0 and 1.
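The sampling of frame-specific coherence values can be illustrated with a short sketch. How the constraint on \(c'\) was enforced is not stated here, so the sketch assumes rejection sampling (redrawing values outside [0, 1]); clipping would be an alternative with slightly different statistics. The function name and the default of one value per frame (42) are likewise assumptions made for illustration.

```python
import random

def sample_frame_coherences(c, n_updates=42, sd=0.07, rng=None):
    """Draw one coherence value c' per screen update, centred on c."""
    rng = rng or random.Random()
    values = []
    while len(values) < n_updates:
        c_prime = rng.gauss(c, sd)
        # Assumption: values outside [0, 1] are redrawn rather than clipped.
        if 0.0 <= c_prime <= 1.0:
            values.append(c_prime)
    return values

coherences = sample_frame_coherences(0.15, rng=random.Random(1))
print(len(coherences), min(coherences) >= 0.0, max(coherences) <= 1.0)
```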

Stimuli for detection blocks were generated using a similar procedure, with the only difference being that on a random half of the trials coherence was set to 0%, without random sampling of coherence values for different frames (see Fig. 3.2).

At the end of each experimental block (100 trials), participants estimated the number of correct responses they had made.

Figure 3.2: Task design for Experiment 1. In both discrimination and detection blocks, participants viewed 700 milliseconds of a random dot motion array, after which they made a keyboard response to indicate their decision (motion direction in discrimination, signal absence or presence in detection), followed by a continuous confidence report using the mouse. 5 participants viewed vertically moving dots and indicated their detection responses on a horizontal scale, and 5 participants viewed horizontally moving dots and indicated their detection responses on a vertical scale.

3.2.2 Randomization

The order and timing of experimental events was determined pseudo-randomly by the Mersenne Twister pseudorandom number generator, initialized in a way that ensures registration time-locking (Mazor, Mazor, & Mukamel, 2019).

3.2.3 Analysis

Experiment 1 was pre-registered (pre-registration document is available here: https://osf.io/z2s93/). Our full pre-registered analysis of behavioural data is available in Appendix D.

Reverse correlation analysis

For the reverse correlation analysis, we followed a procedure similar to the one described in Zylberberg, Barttfeld, & Sigman (2012). For each of the four directions (right, left, up and down), we applied two spatiotemporal filters to the frames of the dot motion stimuli as described in previous studies (Adelson & Bergen, 1985; Zylberberg, Barttfeld, & Sigman, 2012). The outputs of the two filters were squared and summed, resulting in a three-dimensional matrix with motion energy in a specific direction as a function of x, y, and time. We then took the mean of this matrix across the x and y dimensions to obtain an estimate of the overall temporal fluctuations in motion energy in the selected direction. Additionally, for every time point we extracted the variance along the x and y dimensions, to obtain a measure of temporal fluctuations in spatial variance. Using this filter, we obtained estimates of temporal fluctuations in the mean and variance of motion energy for upward, downward, leftward and rightward motion within each trial. Given a high correlation between our mean and variance estimates, we focused our analysis on the mean motion energy.

In order to distill random fluctuations in motion energy from mean differences between stimulus categories, we subtracted the mean motion energy from trial-specific motion energy vectors. The mean motion energy vectors were extracted at the group level, separately for each motion coherence level and as a function of motion direction. We chose this approach instead of the linear regression approach used by Zylberberg, Barttfeld, & Sigman (2012) in order to control for nonlinear effects of coherence on motion energy.
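The demeaning step can be sketched as below. The data layout (a list of trial dictionaries with `coherence`, `direction`, and `energy` keys) and the function name are hypothetical; the sketch only illustrates subtracting a condition-specific mean time course, pooled over all trials passed in, from each trial's motion energy vector.

```python
from collections import defaultdict

def residual_energy(trials):
    """Subtract the mean energy time course of each (coherence, direction) cell.

    trials: list of dicts with keys 'coherence', 'direction', and 'energy'
    (a list with one motion energy value per time point).
    Returns one residual vector per trial, in the original order.
    """
    cells = defaultdict(list)
    for trial in trials:
        cells[(trial["coherence"], trial["direction"])].append(trial["energy"])
    # Mean across trials at every time point, separately for each cell.
    means = {key: [sum(col) / len(col) for col in zip(*vectors)]
             for key, vectors in cells.items()}
    return [
        [x - m for x, m in zip(t["energy"], means[(t["coherence"], t["direction"])])]
        for t in trials
    ]

trials = [
    {"coherence": 0.1, "direction": "right", "energy": [1.0, 2.0]},
    {"coherence": 0.1, "direction": "right", "energy": [3.0, 4.0]},
    {"coherence": 0.2, "direction": "left", "energy": [5.0, 5.0]},
]
print(residual_energy(trials))
```

Because the mean is computed per cell rather than fitted as a linear function of coherence, this approach makes no assumption about how mean motion energy scales with coherence.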

Statistical inference

Statistics were extracted separately for each participant, and group-level inference was then performed on the first-order statistics. T-test Bayes factors were used to quantify the evidence for the null when appropriate, using a Jeffreys-Zellner-Siow prior for the null distribution, with a unit prior scale (Rouder, Speckman, Sun, Morey, & Iverson, 2009).
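For readers who want to see how such a Bayes factor is obtained from a t statistic, the sketch below numerically evaluates the one-sample (or paired) JZS Bayes factor integral given by Rouder et al. (2009). It is an illustration under stated assumptions, not the analysis code used here: the function name, the integration grid, and the use of a simple trapezoid rule on a log-transformed variable are all choices made for this example, and the prior scale `r` is exposed as a parameter.

```python
import math

def jzs_bf01(t, n, r=1.0, lo=-10.0, hi=40.0, steps=5000):
    """Bayes factor in favour of the null for a one-sample t statistic.

    t: observed t value; n: number of participants; r: Cauchy prior scale.
    The integral over g is evaluated on u = log(g) with the trapezoid rule.
    """
    v = n - 1  # degrees of freedom
    null_term = (1 + t ** 2 / v) ** (-(v + 1) / 2)

    def integrand(u):
        g = math.exp(u)
        a = 1 + n * r ** 2 * g
        # g ** -0.5 combines the prior's g ** -1.5 with the Jacobian dg = g du.
        return (a ** -0.5
                * (1 + t ** 2 / (a * v)) ** (-(v + 1) / 2)
                * (2 * math.pi) ** -0.5
                * g ** -0.5
                * math.exp(-1 / (2 * g)))

    h = (hi - lo) / steps
    total = 0.5 * (integrand(lo) + integrand(hi))
    total += sum(integrand(lo + i * h) for i in range(1, steps))
    return null_term / (total * h)

# A t value near zero favours the null; a large t value favours the alternative.
print(jzs_bf01(0.0, 10), jzs_bf01(5.0, 10))
```

Values above 1 indicate support for the null hypothesis. The result depends on the prior scale: at t = 0, a wider prior (larger `r`) yields stronger support for the null.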

3.2.4 Results

3.2.4.1 Response accuracy

Overall proportion correct was 0.74 in the discrimination and 0.72 in the detection task. Performance for discrimination was significantly higher than for detection (\(M_d = 0.02\), 95% CI \([0.00\), \(0.04]\), \(t(9) = 2.43\), \(p = .038\)). This difference in task performance reflected a slower convergence of the staircasing procedure for the discrimination task during the first session. When discarding all data from the first session and analyzing only data from the last three sessions (1800 trials per participant), task performance was equated between the two tasks at the group level (\(M_d = 0.00\), 95% CI \([-0.02\), \(0.02]\), \(t(9) = -0.05\), \(p = .962\); \(\mathrm{BF}_{\textrm{01}} = 3.24\)). In order to avoid confounding differences between discrimination and detection decision and confidence profiles with more general task performance effects, the first session was excluded from all subsequent analyses.

3.2.4.2 Overall properties of response time and confidence distributions

In detection, participants were more likely to respond ‘yes’ than ‘no’ (mean proportion of ‘yes’ responses: \(M = 0.59\), 95% CI \([0.53\), \(0.64]\), \(t(9) = 3.45\), \(p = .007\)). We did not observe a consistent response bias for the discrimination data (mean proportion of ‘rightward’ or ‘upward’ responses: \(M = 0.52\), 95% CI \([0.47\), \(0.57]\), \(t(9) = 1.00\), \(p = .344\)).

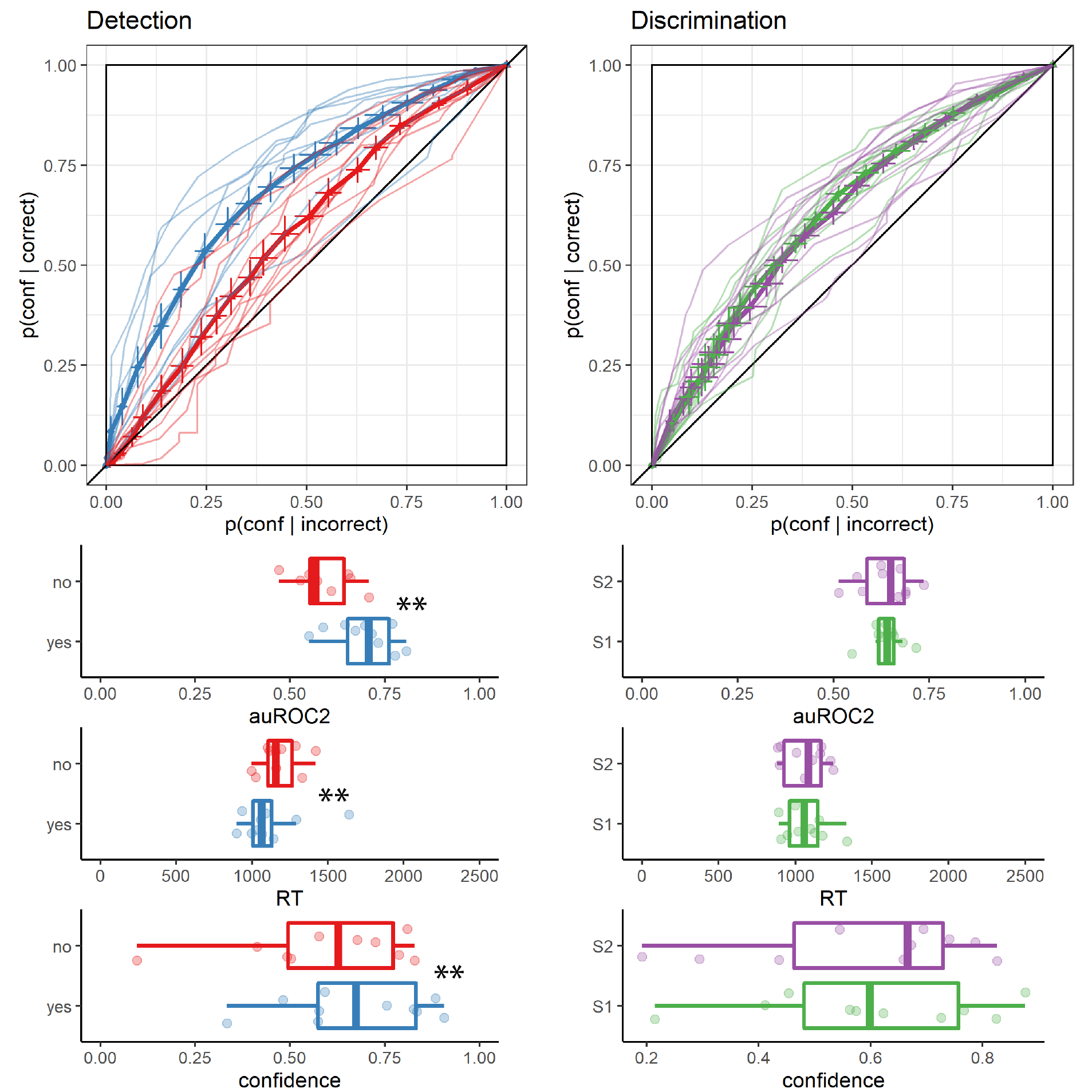

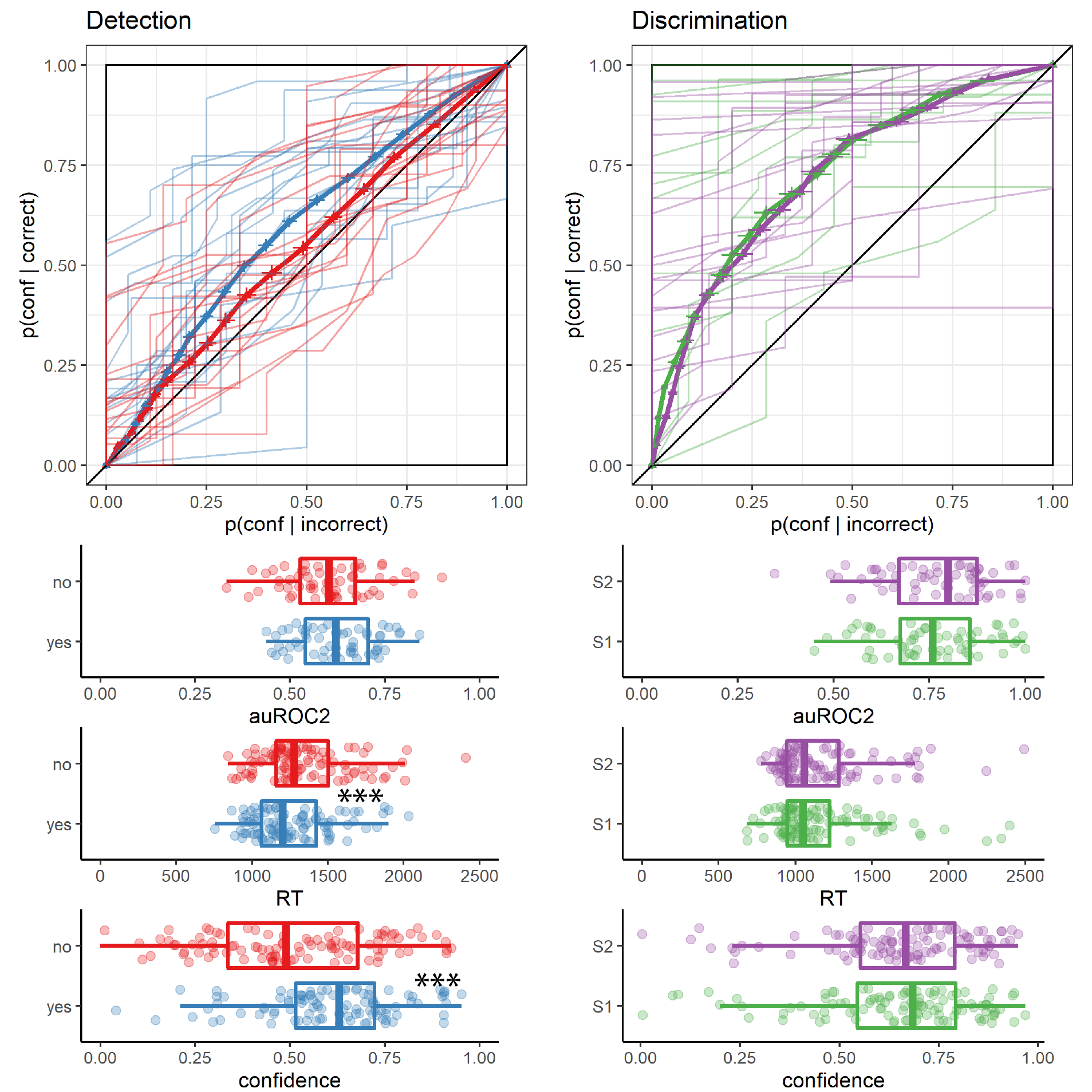

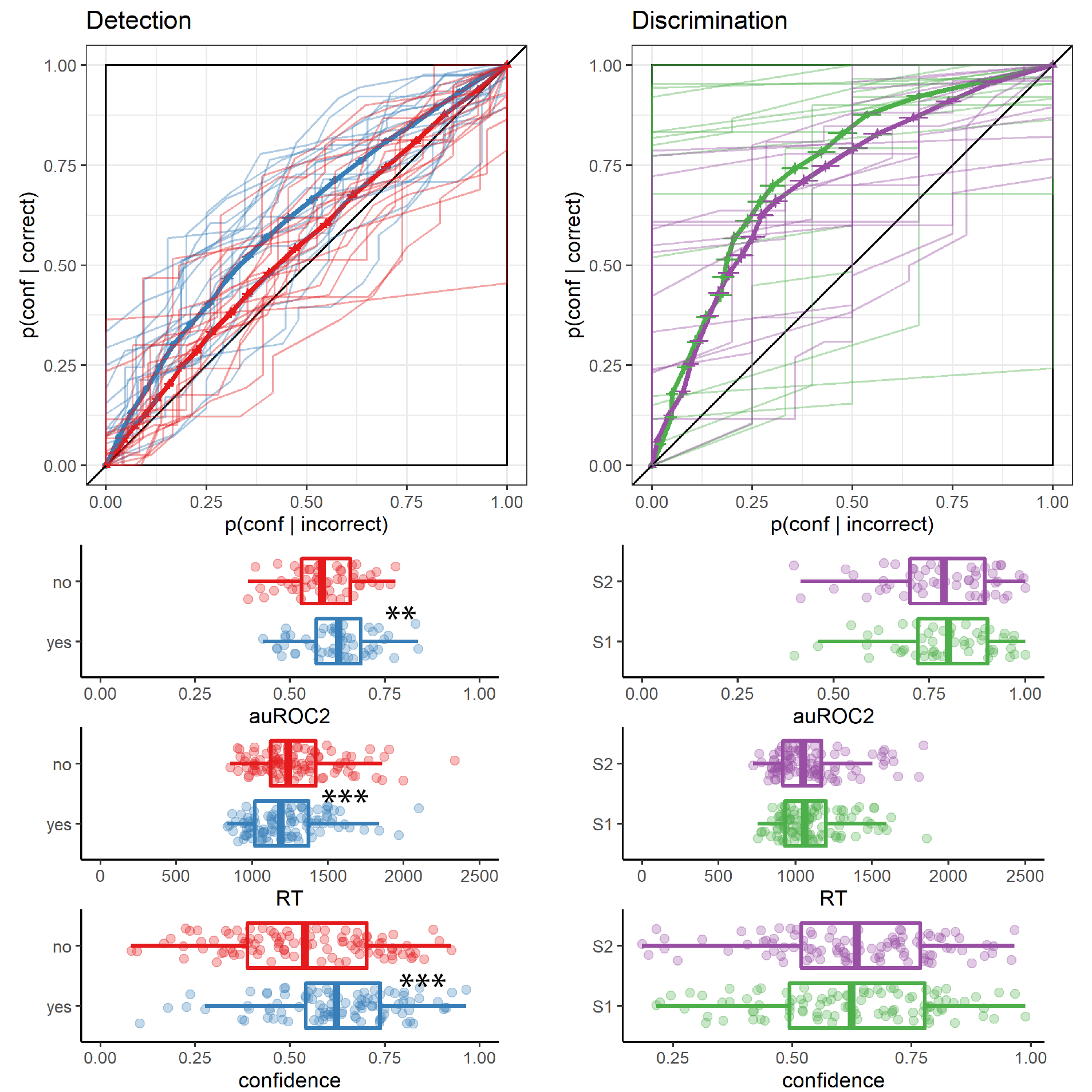

Replicating previous studies (Kellij, Fahrenfort, Lau, Peters, & Odegaard, 2021; Mazor, Friston, & Fleming, 2020; Mazor, Moran, & Fleming, 2021; Meuwese, Loon, Lamme, & Fahrenfort, 2014), we find the typical asymmetries between detection ‘yes’ and ‘no’ responses in response time, overall confidence, and the alignment between subjective confidence and objective accuracy (also termed metacognitive sensitivity, here measured as the area under the response-conditional type 2 ROC curve; see Fig. 3.3). ‘No’ responses were slower compared to ‘yes’ responses (median difference: 85.37 ms), and accompanied by lower levels of subjective confidence (mean difference of 0.08 on a 0-1 scale). Metacognitive sensitivity was higher for detection ‘yes’ compared with detection ‘no’ responses (mean difference in area under the curve units: 0.11). No difference in response time, confidence, or metacognitive sensitivity was found between the two discrimination responses. For a detailed statistical analysis of these behavioural asymmetries see Appendix D.1.1.

Figure 3.3: Behavioural asymmetries in metacognitive sensitivity, response time, and overall confidence, in Exp. 1. Top row: Response conditional type 2 ROC curves for the two tasks and four responses in Exp. 1. The area under the type 2 ROC curve is a measure of metacognitive sensitivity, and the difference in areas between the two responses a measure of metacognitive asymmetry. Single-subject curves are presented in low opacity. Second, third, and fourth rows: distributions of the area under the type 2 ROC curve, median response time, and mean confidence for the four responses, across participants. Box edges represent the 25th and 75th percentiles, and central lines the 50th percentile (median). Whiskers cover data points within four inter-quartile ranges around the median. Stars represent significance in a two-sided t-test: **: p<0.01, ***: p<0.001.
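As an illustration of the metacognitive sensitivity measure, the sketch below computes the area under an empirical type 2 ROC curve for the trials of a single response (for example, all detection ‘yes’ trials). It relies on the standard equivalence between the area under an empirical ROC curve and the probability that a randomly chosen correct trial carries higher confidence than a randomly chosen error, with ties counted as one half. The function name and the pairwise implementation are choices made for this example.

```python
def type2_auroc(confidence, correct):
    """Area under the type 2 ROC curve for the trials of one response.

    confidence: confidence ratings; correct: booleans of the same length.
    """
    right = [c for c, ok in zip(confidence, correct) if ok]
    wrong = [c for c, ok in zip(confidence, correct) if not ok]
    if not right or not wrong:
        raise ValueError("need at least one correct and one incorrect trial")
    score = 0.0
    for r in right:
        for w in wrong:
            if r > w:
                score += 1.0
            elif r == w:
                score += 0.5  # ties contribute half
    return score / (len(right) * len(wrong))

# Confidence perfectly separates correct from incorrect trials here.
print(type2_auroc([0.9, 0.8, 0.2, 0.1], [True, True, False, False]))
```

A metacognitive asymmetry can then be expressed as the difference between the areas obtained separately for ‘yes’ and ‘no’ trials.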

3.2.4.3 Reverse Correlation

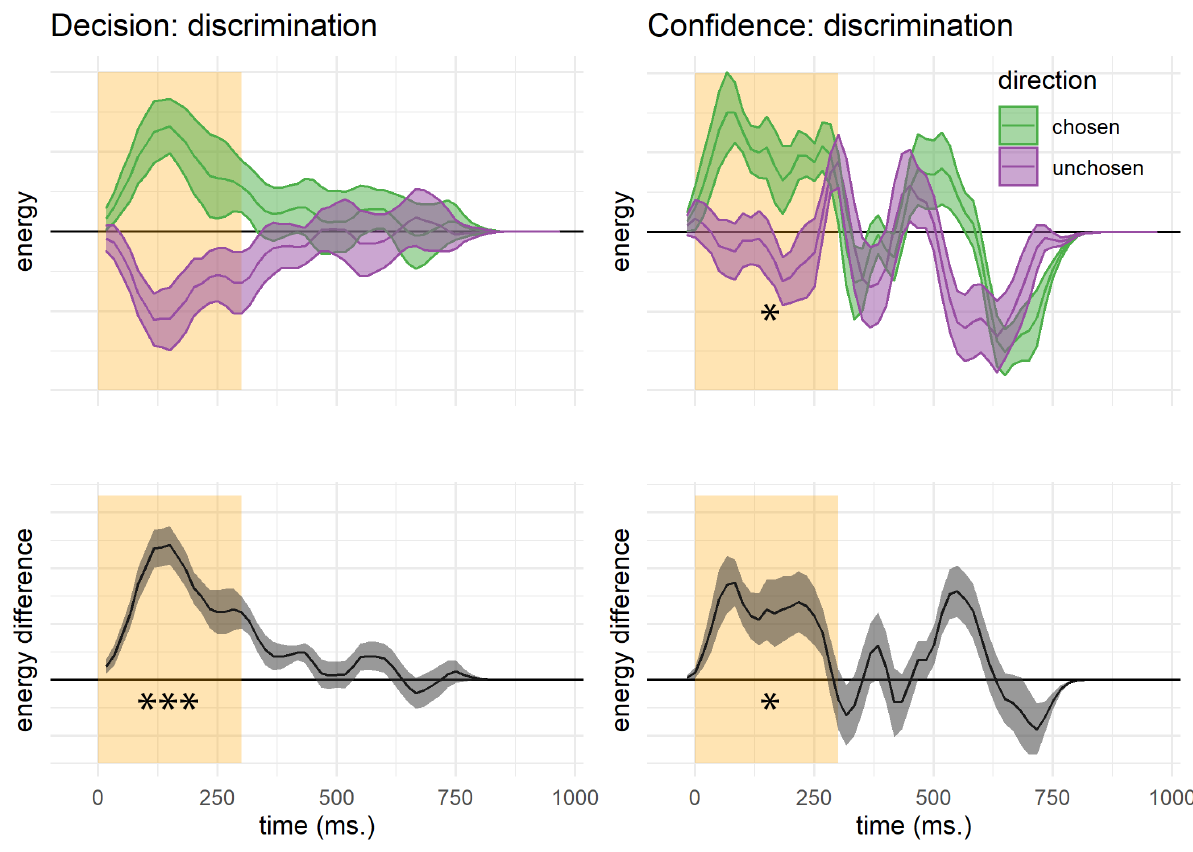

Random fluctuations in motion energy made it possible to apply reverse correlation to test which stimulus features are incorporated into decisions and confidence ratings in detection and discrimination. Following Zylberberg, Barttfeld, & Sigman (2012), our statistical analysis focused on the first 300 milliseconds after stimulus onset.

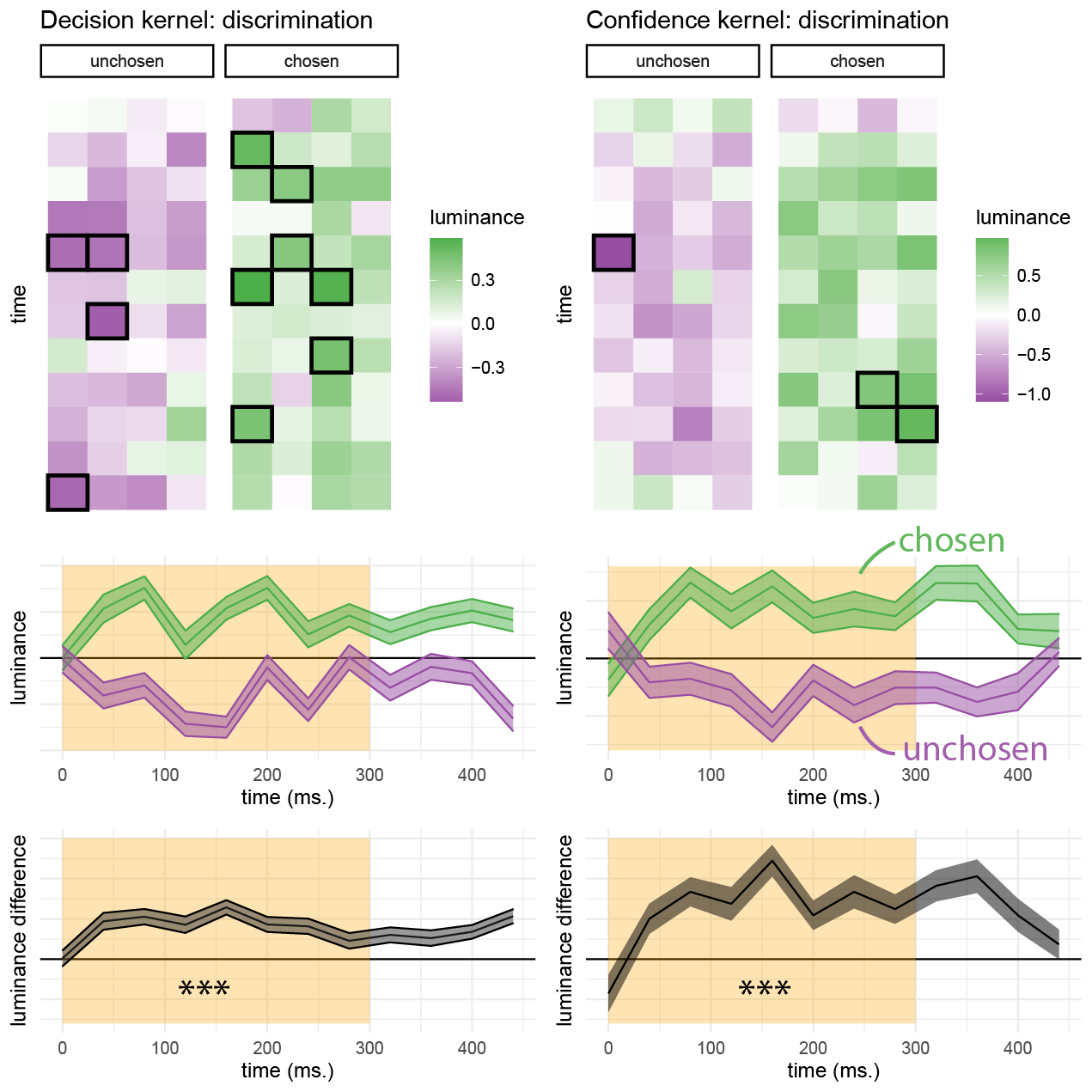

Discrimination

Figure 3.4: Decision and confidence discrimination kernels, Experiment 1. Upper left: motion energy in the chosen (green) and unchosen (purple) direction as a function of time. Lower left: a subtraction between energy in the chosen and unchosen directions. Upper right: confidence effects for motion energy in the chosen (green) and unchosen (purple) directions. Lower right: a subtraction between confidence effects in the chosen and unchosen directions. Shaded areas represent the mean \(\pm\) one standard error. The first 300 milliseconds of the trial are marked in yellow. Stars represent significance in a two-sided t-test: *: p<0.05, **: p<0.01, ***: p<0.001. In the upper row, stars represent the significance of a positive evidence bias in evidence weighting.

Reverse correlation analysis quantified the effect of random fluctuations in motion energy on the probability of responding ‘right’ and ‘left’ (or ‘up’ and ‘down’), and the temporal dynamics of decision formation. Similar to the results obtained by Zylberberg, Barttfeld, & Sigman (2012), participants’ decisions were sensitive to motion energy fluctuations during the first 300 milliseconds of the trial (\(t(9) = 7.73\), \(p < .001\); see Fig. 3.4, left panels). The symmetry of the two time courses around the x axis does not by itself entail an equal contribution of negative and positive evidence to the final decision, because due to the demeaning procedure, with enough trials negative and positive evidence at each time point should mathematically sum to zero. Instead, we tested the contribution of motion energy in the true and opposite directions of motion (defined with respect to the stimulus, and independently of decision) to discrimination decisions. Fluctuations in motion energy in both directions contributed significantly to discrimination decisions (\(t(9) = 8.38\), \(p < .001\)), with no significant difference between them (\(t(9) = -0.65\), \(p = .529\)). In other words, positive and negative evidence equally contributed to discrimination decisions, even when defined independently of the decision.

We then turned to the contribution of motion energy to subjective confidence ratings. The median confidence rating in each experimental session was used to split all motion energy vectors into four groups, according to decision (chosen or unchosen directions) and confidence level (high or low). Confidence kernels for the chosen and unchosen directions were then extracted by subtracting the mean low confidence vectors from the mean high confidence vectors for both the chosen and unchosen directions. We observed a significant effect of motion energy on confidence within this time window (\(t(19) = 2.52\), \(p = .021\); see Fig. 3.4, right panels). This effect was significantly stronger for motion energy in the chosen direction, compared to the unchosen direction (\(t(9) = 2.81\), \(p = .020\)). In other words, confidence ratings in the discrimination task were more sensitive to positive evidence than to negative evidence. This is a replication of the Positive Evidence Bias observed in Zylberberg, Barttfeld, & Sigman (2012).
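The median-split procedure for confidence kernels can be sketched as follows for a single session and a single direction (chosen or unchosen). The function names are hypothetical, the inputs are assumed to be demeaned motion energy vectors, and assigning trials that fall exactly on the median to the low-confidence group is an assumption of this example.

```python
from statistics import median

def mean_vector(vectors):
    """Average a list of equal-length vectors, time point by time point."""
    return [sum(col) / len(col) for col in zip(*vectors)]

def confidence_kernel(energy, confidence):
    """High-minus-low confidence difference in mean motion energy.

    energy: one residual motion energy vector per trial, for one direction.
    confidence: one confidence rating per trial, from the same session.
    """
    cut = median(confidence)
    # Assumption: ratings equal to the median are treated as low confidence.
    high = [e for e, c in zip(energy, confidence) if c > cut]
    low = [e for e, c in zip(energy, confidence) if c <= cut]
    return [h - l for h, l in zip(mean_vector(high), mean_vector(low))]

chosen = [[1.0, 1.0], [3.0, 3.0], [0.0, 0.0], [2.0, 2.0]]
ratings = [0.9, 0.8, 0.1, 0.2]
print(confidence_kernel(chosen, ratings))
```

Applying the same function to energy in the chosen and in the unchosen direction gives the two kernels whose magnitudes are compared when testing for a positive evidence bias.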

Detection

Reverse correlation analysis for detection introduces a challenge: while ‘no’ responses reflect a belief in the absence of any coherent motion, ‘yes’ responses can result from detection of any type of coherent motion going in either direction (or both). We chose to have two possible motion directions in the detection task in order to prevent participants from making ‘no’ responses based on significant motion in an unexpected direction. While this choice ensured that participants cannot trivially accumulate evidence for absence, it also made the reverse correlation analysis more difficult, as we did not have full access to participants’ beliefs about the stimulus when they responded ‘yes.’

As a first approximation, we tested whether sum motion energy along the relevant dimension (horizontal or vertical), regardless of direction (up/down or left/right), affected the probability of a ‘yes’ response. Sum motion energy did not have a significant effect on participants’ responses during the first 300 milliseconds (\(t(9) = 1.23\), \(p = .249\)) or at any other time point. The effect of sum motion energy on decision confidence during the first 300 milliseconds was positive and marginally significant (\(t(9) = 2.15\), \(p = .060\)). Response-specific effects of sum motion energy on decision confidence were not significant for either response.

Detection signal trials

A failure to find significant effects of sum motion energy on detection decisions and confidence may be due to the fact that participants were sensitive to relative evidence (e.g., ‘more dots are moving to the right than to the left’) rather than to the sum motion along the relevant axis (‘many dots are moving to the right and to the left’). However, as we mention above, on any single trial, we cannot tell whether a ‘yes’ response means ‘I perceived coherent motion to the right’ or ‘I perceived coherent motion to the left.’ Instead, in order to approximate participants’ belief states during ‘yes’ responses, we focused only on trials in which coherent motion was presented in one of the two directions (signal trials). In these trials, we reasoned that a ‘yes’ response is most likely to reflect the detection of the true direction of motion. We therefore asked whether fluctuations in the true and opposite directions of motion contributed to detection decision and confidence. This was done by subtracting the motion energy vectors for ‘yes’ and ‘no’ responses in the true and opposite motion directions.

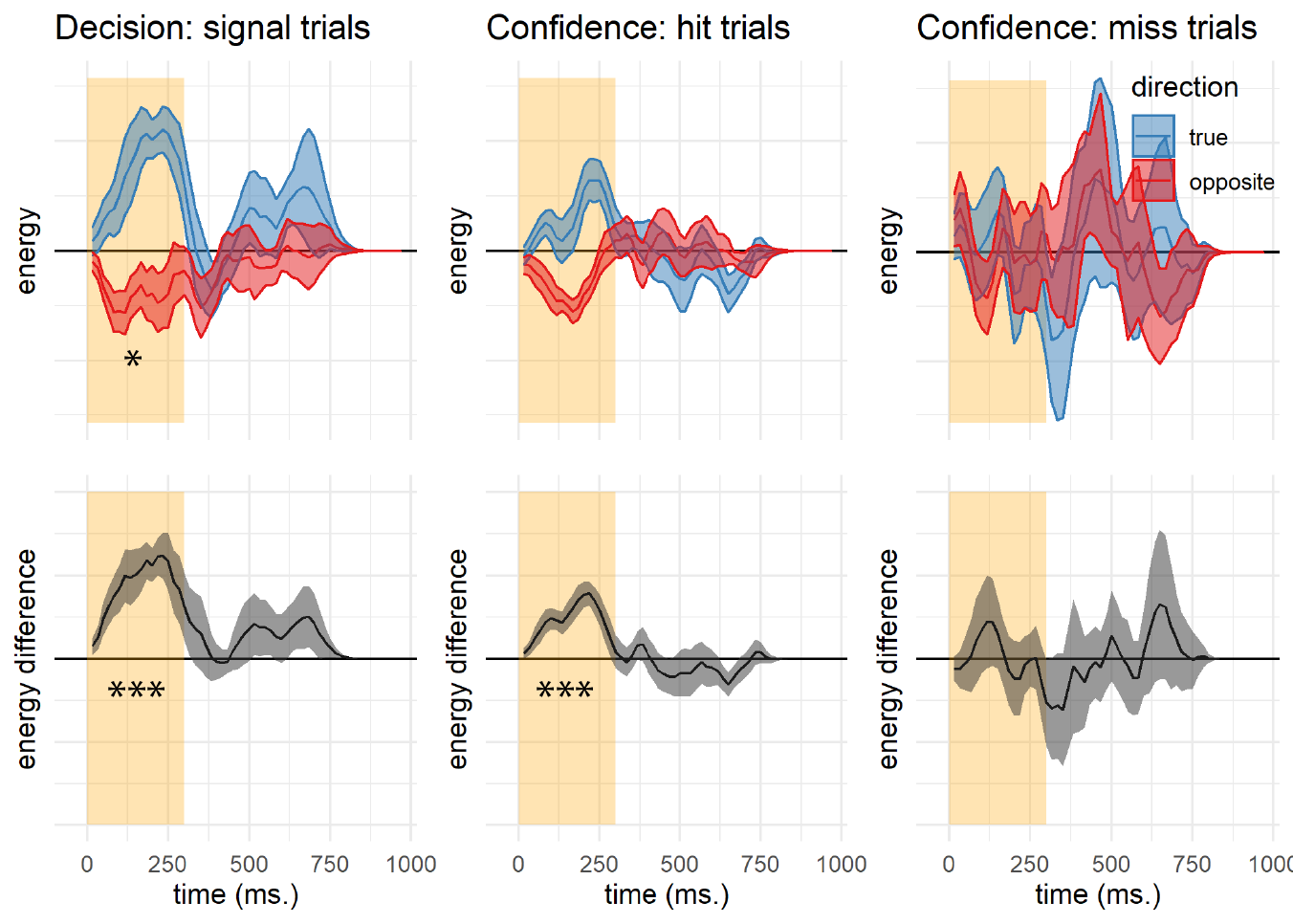

Similar to discrimination decisions, detection decisions were most sensitive to perceptual evidence in the first 300 milliseconds of the trial (see Fig. 3.5, left panels). However, in contrast to discrimination, an asymmetric evidence weighting was apparent in the decision itself: when deciding whether a stimulus contained coherent motion, participants were more sensitive to fluctuations in motion energy that strengthened the true direction of motion, in comparison to fluctuations that weakened motion in the opposite direction (\(t(9) = 2.31\), \(p = .046\)).

Motion fluctuations in the first 300 milliseconds of the trial also contributed to confidence in detection ‘yes’ responses (contrasting high and low confidence hit trials; \(t(9) = 6.13\), \(p < .001\)). But unlike in the discrimination task, here we found no positive evidence bias in confidence ratings (\(t(9) = 0.11\), \(p = .913\)). To reiterate, while detection decisions were mostly sensitive to fluctuations in motion energy toward the true direction of motion, confidence in detection ‘yes’ responses was equally sensitive to fluctuations in the true and opposite directions of motion. Confidence in ‘miss’ trials was independent of motion energy (\(t(9) = 0.16\), \(p = .874\)). This was true for motion energy in the true direction of motion (\(t(9) = 0.12\), \(p = .908\)) as well as for motion energy in the opposite direction (\(t(9) = -0.08\), \(p = .941\)). However, and to anticipate the results of Exp. 3 presented below, we note that this equal weighting of positive and negative evidence in detection confidence was not replicated in an experiment designed to directly test this surprising result with an experimental manipulation.

Figure 3.5: Decision and confidence detection kernels in signal trials, Experiment 1. Upper left: difference in motion energy between ‘yes’ and ‘no’ responses in the true (blue) and opposite (red) directions as a function of time. Upper middle and right: confidence effects for motion energy in the true and opposite directions for ‘yes’ and ‘no’ responses, respectively. Lower panels: the subtraction of decision and confidence kernels for the true and opposite directions. Shaded areas represent the mean \(\pm\) one standard error. The first 300 milliseconds of the trial are marked in yellow. Stars represent significance in a two-sided t-test: *: p<0.05, **: p<0.01, ***: p<0.001. In the upper row, stars represent the significance of a positive evidence bias in evidence weighting.

3.3 Experiment 2

In Exp. 1, we replicated previous observations of a positive evidence bias in discrimination confidence, such that evidence in support of a decision was given more weight in the construction of confidence than evidence against it. In contrast, in detection a positive evidence bias was apparent for the decision, but not for the confidence kernels. Equal weighting of positive and negative evidence suggests that detection confidence followed not the presence or absence of a signal, but the clarity of its identity. Furthermore, confidence in detection ‘no’ responses was not at all affected by fluctuations in motion energy.

In Exp. 2 we tested the robustness of these findings by employing a different type of stimuli (flickering patches) and mode of data collection (a ~10 minute online experiment). Our pre-registered objectives (documented here: https://osf.io/8u7dk/) were, first, to replicate a positive evidence bias in discrimination; second, to replicate the absence of a positive evidence bias in detection confidence ratings; and third, to replicate the absence of an effect for either positive or negative evidence on confidence in ‘no’ judgments.

3.3.1 Methods

3.3.1.1 Participants

The research complied with all relevant ethical regulations, and was approved by the Research Ethics Committee of University College London (study ID number 1260/003). 147 participants (median reported age: 32; range: [19-78]) were recruited via Prolific (prolific.co), and gave their informed consent prior to their participation. They were selected based on their acceptance rate (>95%) and for being native English speakers. Following our pre-registration, we aimed to collect data until we had reached 100 included participants based on our pre-specified inclusion criteria (see https://osf.io/8u7dk/). Our final data set includes observations from 102 included participants. The entire experiment took around 10 minutes to complete. Participants were paid £1.25 for their participation, equivalent to an hourly wage of £7.50.

3.3.1.2 Experimental paradigm

The experiment was programmed using the jsPsych and P5 JavaScript packages (De Leeuw, 2015; McCarthy, 2015), and was hosted on a JATOS server (Lange, Kuhn, & Filevich, 2015). It consisted of two tasks (Detection and Discrimination) presented in separate blocks. A total of 56 trials of each task were delivered in 2 blocks of 28 trials each. The order of experimental blocks was interleaved, starting with discrimination.

The first discrimination block started after an instruction section, which included instructions about the stimuli and confidence scale, four practice trials and four confidence practice trials. Further instructions were presented before the second block. Instruction sections were followed by multiple-choice comprehension questions, to monitor participants’ understanding of the main task and confidence reporting interface. To encourage concentration, feedback was delivered at the end of the second and fourth blocks about overall performance and mean confidence in the task.

Importantly, unlike the lab-based experiment, there was no calibration of difficulty for the two tasks. The rationale for this is that in Exp. 1 perceptual thresholds for motion discrimination were highly consistent across participants, and staircasing took a long time to converge. Furthermore, in Exp. 1 we aimed to control for task difficulty, but this introduced differences between the stimulus intensity in detection and discrimination. To complement our findings, here we aimed to match stimulus intensity between the two tasks, and accept that task performance might vary.

Trial structure

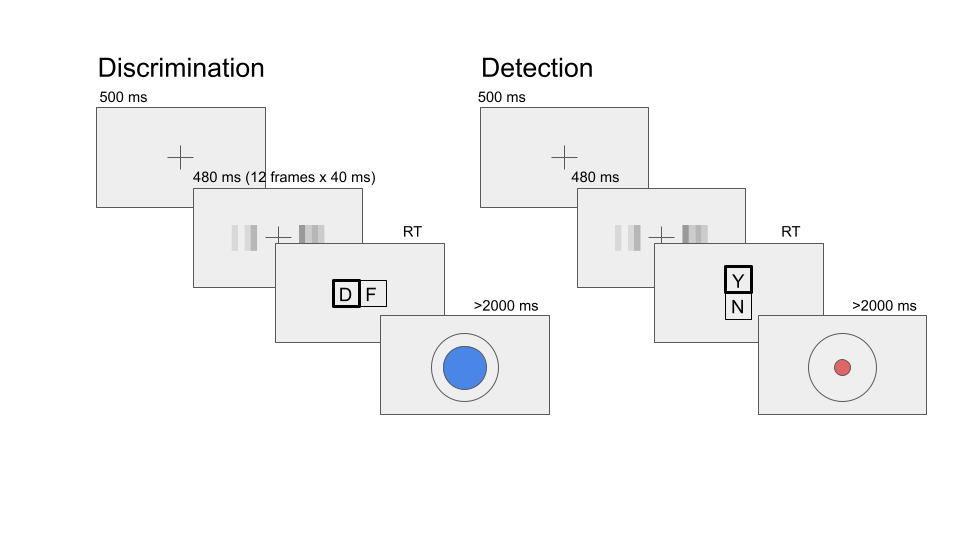

In discrimination blocks, trial structure closely followed Exp. 2 from Zylberberg, Barttfeld, & Sigman (2012), with a few adaptations. Following a fixation cross (500 ms), two sets of four adjacent vertical gray bars were presented as a rapid serial visual presentation (RSVP; 12 frames, presented at 25Hz), displayed to the left and right of the fixation cross (see Fig. 3.6). On each frame, the luminance of the bars was randomly sampled from a Gaussian distribution with a standard deviation of 10/255 units in the standard RGB 0-255 coordinate system. The average luminance of one set of bars was that of the background (128/255). The average luminance of the other set was 133/255, making this patch brighter on average. Participants then reported which of the two sets was brighter on average using the ‘D’ and ‘F’ keys on the keyboard. After their response, they rated their confidence on a continuous scale, by controlling the size of a colored circle with their mouse. High confidence was mapped to a big, blue circle, and low confidence to a small, red circle. To discourage hasty confidence ratings, the confidence rating scale stayed on the screen for at least 2000 milliseconds. Feedback about response accuracy was delivered after the confidence rating phase.

Figure 3.6: Task design for Experiment 2. In both tasks, participants viewed 480 milliseconds of two flickering patches, after which they made a keyboard response to indicate which of the patches was brighter (discrimination) or whether either of the patches was brighter than the background (detection).

Detection blocks were similar to discrimination blocks, with the exception that decisions were made about whether the average luminance of either of the two sets was brighter than the gray background, or not. In ‘different’ trials, the luminance of the four bars in one of the sets was sampled from a Gaussian distribution with mean 133/255, and the luminance of the other set from a Gaussian distribution with mean 128/255. In ‘same’ trials, the luminance of both sets was sampled from a distribution centered at 128/255. Decisions in Detection trials were reported using the ‘Y’ and ‘N’ keys. Confidence ratings and feedback were as in the discrimination task.
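The stimulus description above can be made concrete with a minimal sketch, written here in Python rather than the language of the original experiment. The function and constant names are hypothetical, and the sketch assumes that luminance values are used as sampled (any clipping to the 0-255 range is not described in the text and is not modelled).

```python
import random

BACKGROUND = 128          # mean luminance of a non-target set (RGB 0-255 units)
SIGNAL = 133              # mean luminance of the brighter set
SD = 10                   # per-frame standard deviation of luminance
N_FRAMES, N_BARS = 12, 4  # 12 frames at 25 Hz, four bars per set


def sample_patch(mean, rng):
    """Return a 12 x 4 nested list of luminance values for one set of bars."""
    return [[rng.gauss(mean, SD) for _ in range(N_BARS)] for _ in range(N_FRAMES)]


def make_trial(task, signal_present, signal_side, rng):
    """Build one trial for 'discrimination' or 'detection'.

    In discrimination one set is always brighter, so signal_present is ignored.
    In detection, 'same' trials (signal_present=False) use the background mean
    for both sets.
    """
    means = {"left": BACKGROUND, "right": BACKGROUND}
    if task == "discrimination" or signal_present:
        means[signal_side] = SIGNAL
    return {side: sample_patch(m, rng) for side, m in means.items()}
```

Each trial therefore carries 2 x 12 x 4 = 96 random luminance values, which is the input that a reverse correlation analysis operates on.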

3.3.2 Randomization

The order and timing of experimental events was determined pseudo-randomly by the Mersenne Twister pseudorandom number generator, initialized in a way that ensures registration time-locking (Mazor, Mazor, & Mukamel, 2019).
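As a rough illustration only, the sketch below seeds a Mersenne Twister generator from a hash of pre-registration material, so that the trial sequence cannot be known before the protocol is fixed. This seeding scheme is an assumption made for the example; the actual initialization procedure is the one described in Mazor, Mazor, & Mukamel (2019). The name `protocol_bytes` is hypothetical.

```python
import hashlib
import random


def rng_from_protocol(protocol_bytes):
    """Return a Mersenne Twister generator seeded from a protocol hash.

    Python's random.Random implements the Mersenne Twister (MT19937).
    Deriving the seed from a SHA-256 digest is an illustrative assumption.
    """
    digest = hashlib.sha256(protocol_bytes).hexdigest()
    return random.Random(int(digest, 16))
```

Two runs that start from the same protocol bytes produce the same pseudo-random sequence, whereas any change to the protocol changes the sequence.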

3.3.3 Results

3.3.3.1 Response accuracy

Overall proportion correct was 0.85 in the discrimination and 0.67 in the detection task. Performance for discrimination was significantly higher than for detection (\(M_d = 0.18\), 95% CI \([0.16\), \(0.20]\), \(t(101) = 18.01\), \(p < .001\)). Unlike in Exp. 1, where we aimed to control for task difficulty, here we decided to match stimulus intensity between the two tasks, so a difference between detection and discrimination performance was expected (Wickens, 2002, p. 104).

3.3.3.2 Overall properties of response and confidence distributions

Similar to Exp. 1, participants were more likely to respond ‘yes’ than ‘no’ in the detection task (mean proportion of ‘yes’ responses: \(M = 0.54\), 95% CI \([0.53\), \(0.56]\), \(t(101) = 4.78\), \(p < .001\)). We did not observe a consistent response bias in discrimination (mean proportion of ‘right’ responses: \(M = 0.50\), 95% CI \([0.48\), \(0.51]\), \(t(101) = -0.62\), \(p = .537\)).

As in Exp. 1, we also found behavioural asymmetries between the two detection responses (see Fig. 3.7), with ‘yes’ responses being faster (median difference of 77.12 ms) and accompanied by higher levels of confidence (mean difference of 0.10 on a 0-1 scale). Unlike in Exp. 1, here we found no evidence for a difference in metacognitive sensitivity between ‘yes’ and ‘no’ responses (mean difference of 0.02 in AUC units). No asymmetries were observed between the two discrimination responses. For a detailed statistical analysis see Appendix D.2.1.

Figure 3.7: Behavioural asymmetries in metacognitive sensitivity, response time, and overall confidence, in Exp. 2. Same conventions as in Fig. 3.3.

3.3.3.3 Reverse Correlation

Stimuli in Exp. 2 consisted of two flickering patches, each comprising 4 gray bars presented for 12 frames. Together, this summed to 96 random luminance values per trial, which we subjected to reverse correlation analysis, following the analysis procedure of Exp. 2 in Zylberberg, Barttfeld, & Sigman (2012).

Discrimination decisions

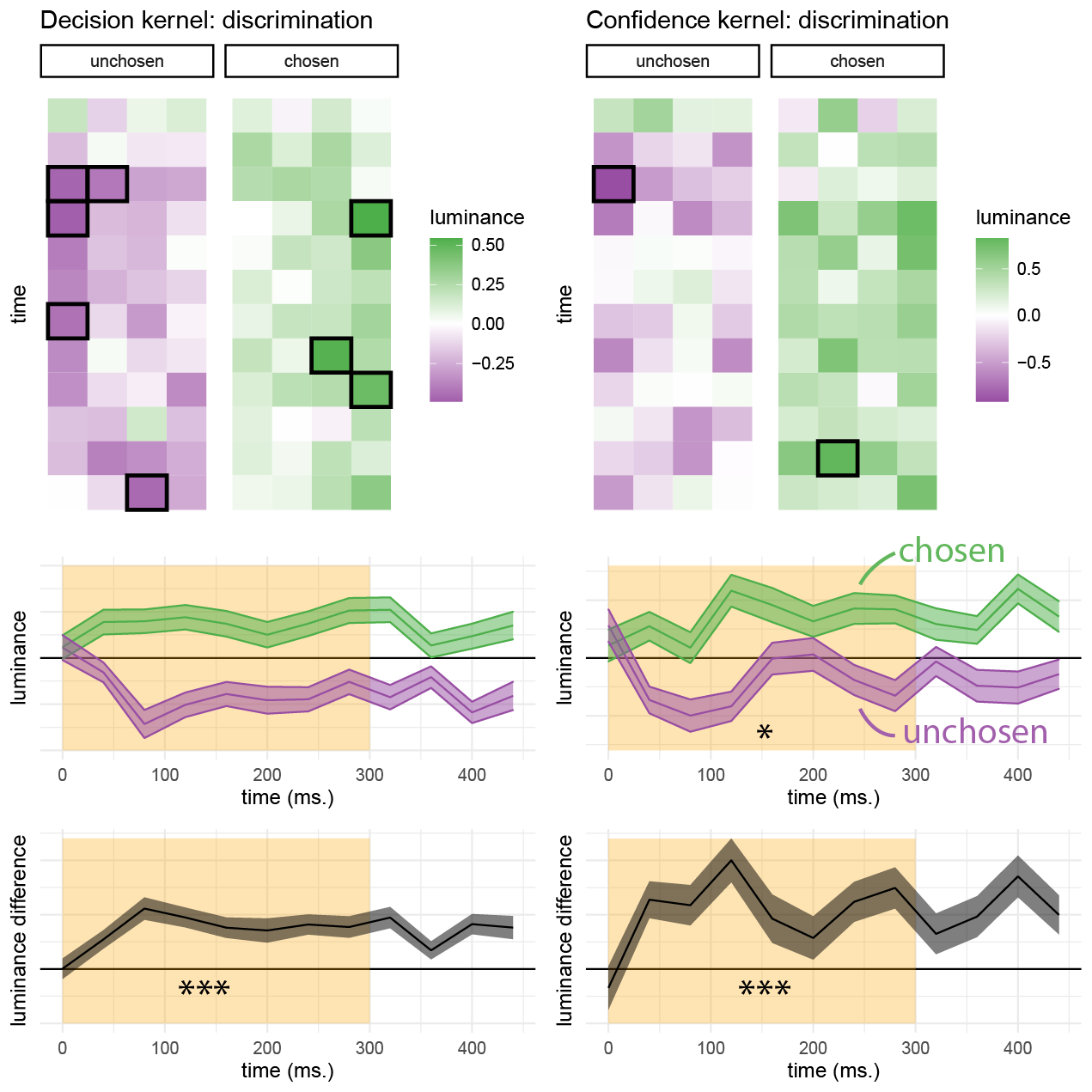

First, we asked whether random fluctuations in luminance had an effect on participants’ discrimination responses. Similar to the results obtained by Zylberberg et al., discrimination decisions were sensitive to fluctuations in luminance during the first 300 milliseconds of the trial (\(t(101) = 10.98\), \(p < .001\); see Fig. 3.8, left panels). As per our approach to the reverse correlation analysis of Exp. 1, in order to test for decision biases we need to divide evidence based on a criterion that is independent of participants’ decisions. When sorting evidence based on the location of the true signal, participants’ decisions were significantly more sensitive to fluctuations in luminance in the non-signal compared with the signal stimulus within the first 300 milliseconds of the trial (\(t(100) = -2.29\), \(p = .024\)). Importantly, this asymmetry (effectively, a negative evidence bias) is in the opposite direction to what we later find in discrimination confidence and detection decisions.

Figure 3.8: Decision and confidence discrimination kernels, Experiment 2. Upper panels: decision (left) and confidence (right) kernels for the flickering patch stimuli. Black frames signify a significant effect at the 0.05 significance level controlling for family-wise error rate across the 48 (12 timepoints × 4 positions) comparisons. Middle panels: decision and confidence kernels, averaged across the four bars to yield a single timecourse for the chosen (green) and unchosen (purple) stimuli. Lower panels: subtraction of luminance timecourses for the chosen and unchosen stimuli. Same plotting conventions as Fig. 3.4.

Discrimination confidence

We observed a significant effect of luminance on confidence within the first 300 milliseconds of the stimulus (\(t(100) = 7.14\), \(p < .001\); see Fig. 3.8, right panels). Replicating Zylberberg, Barttfeld, & Sigman (2012), this effect was significantly stronger for luminance in the chosen stimulus, compared to the unchosen one (\(t(100) = 2.56\), \(p = .012\)), consistent with a positive evidence bias.
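The logic of the discrimination kernels can be sketched as follows. This is a simplified illustration, not the analysis code used for the results above: it assumes that each trial is stored as a dictionary with hypothetical keys `chosen` and `unchosen` (12 x 4 luminance values each) and `confidence`, and that confidence effects are estimated with a median split into high- and low-confidence trials.

```python
from statistics import mean, median


def frame_means(patch):
    """Average a 12 x 4 patch over its four bars: one value per frame."""
    return [mean(frame) for frame in patch]


def decision_kernel(trials):
    """Per-frame mean luminance difference, chosen minus unchosen stimulus."""
    diffs = [
        [c - u for c, u in zip(frame_means(t["chosen"]), frame_means(t["unchosen"]))]
        for t in trials
    ]
    return [mean(col) for col in zip(*diffs)]


def confidence_kernel(trials, key):
    """Per-frame luminance in high- minus low-confidence trials.

    key is 'chosen' or 'unchosen'. The median split is an assumption of
    this sketch.
    """
    cut = median(t["confidence"] for t in trials)
    high = [frame_means(t[key]) for t in trials if t["confidence"] > cut]
    low = [frame_means(t[key]) for t in trials if t["confidence"] <= cut]
    return [mean(h) - mean(l) for h, l in zip(zip(*high), zip(*low))]
```

Under this scheme, a positive evidence bias corresponds to a confidence kernel for the chosen stimulus that is larger in magnitude than the (sign-flipped) kernel for the unchosen stimulus.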

Detection decisions

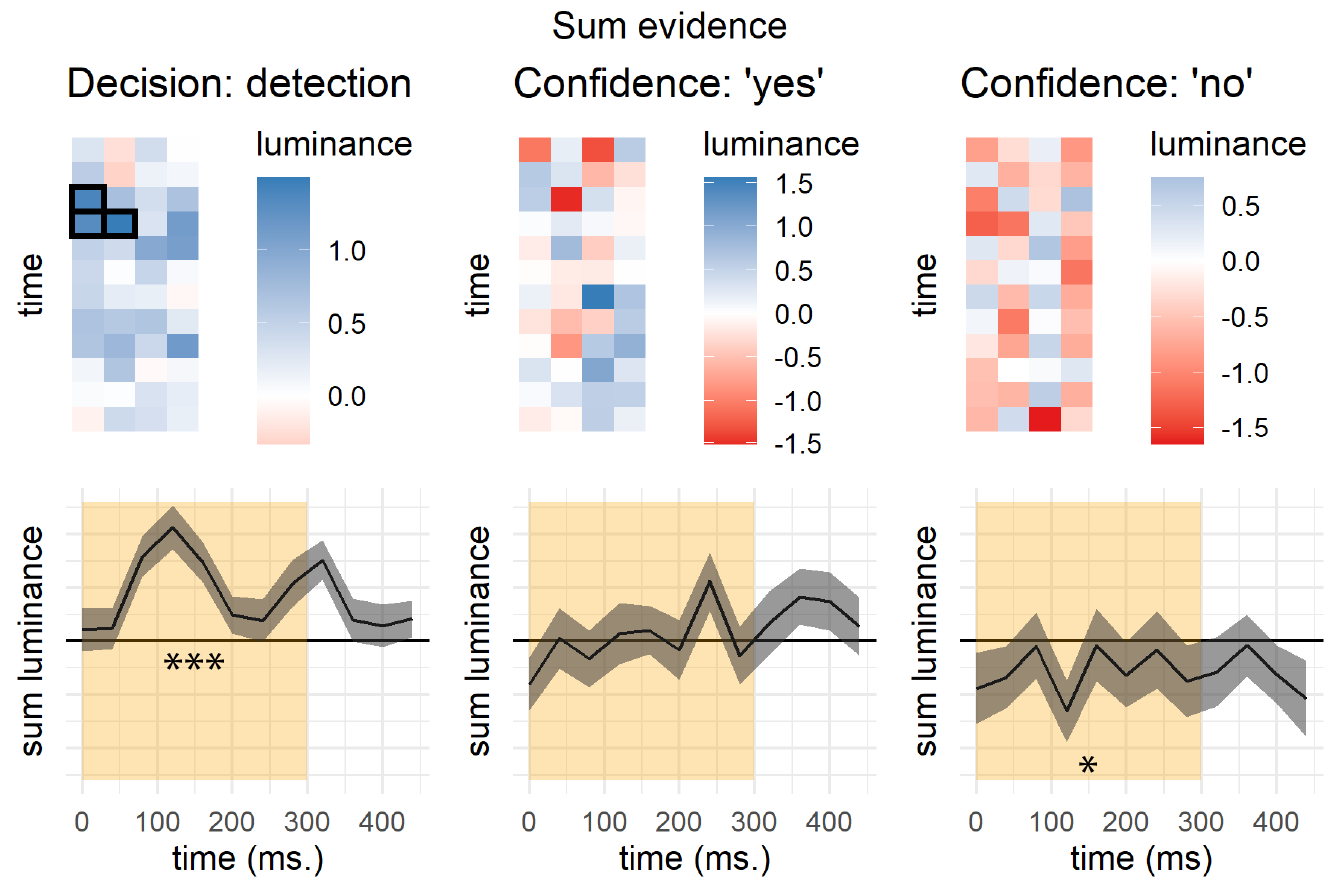

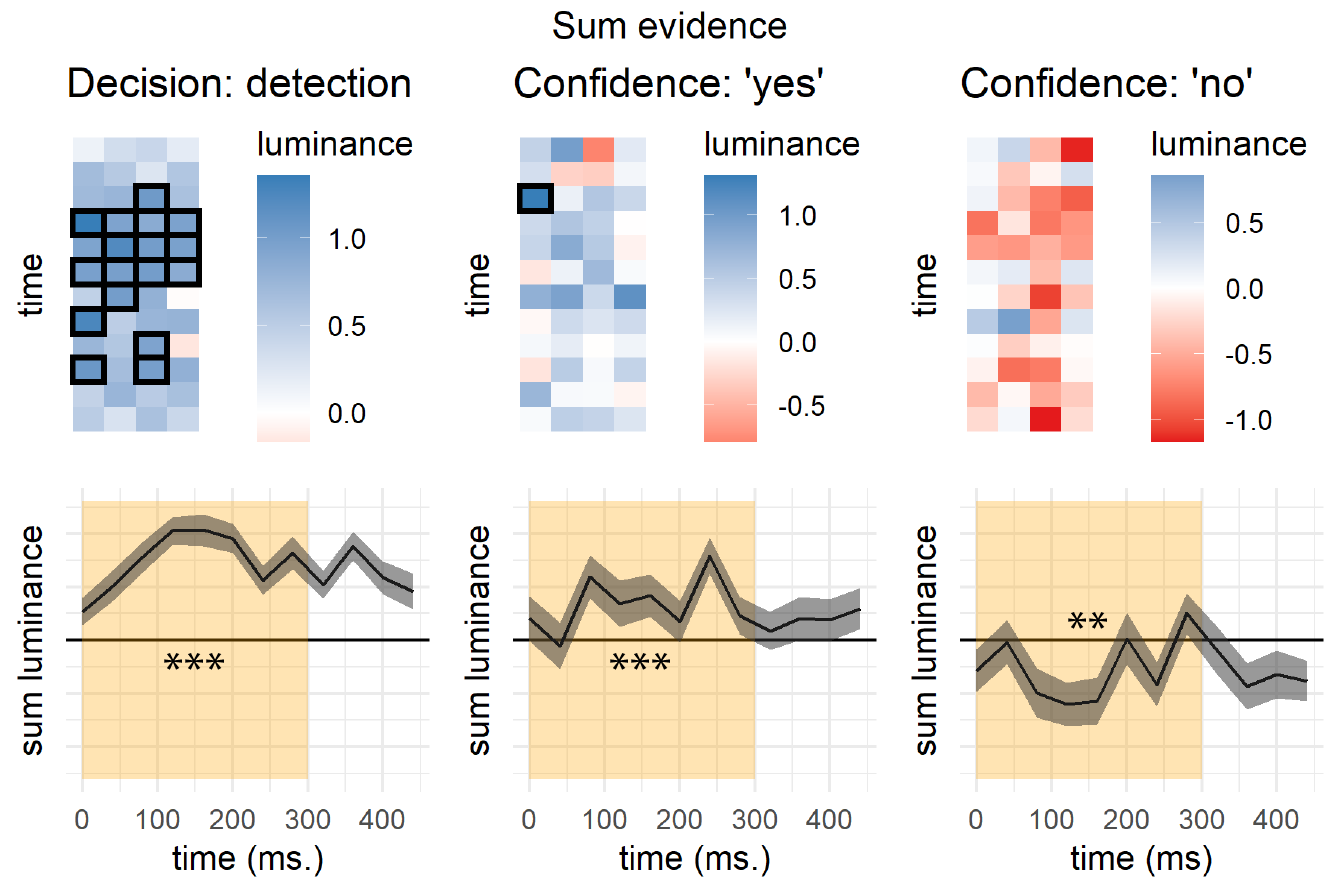

We pooled luminance values from both right and left stimuli and contrasted the resulting values as a function of detection response. Sum luminance had a significant effect on participants’ detection responses during the first 300 milliseconds (\(t(101) = 6.10\), \(p < .001\); see Fig. 3.9, left panel), suggesting that participants were sensitive to sum evidence in their detection responses, as expected from a model in which detection is rationally based on the likelihood ratio between signal presence and absence (see Fig. 3.1).

We then asked if overall luminance had an effect on decision confidence, such that participants are more confident in their ‘yes’ responses for brighter displays, and more confident in their ‘no’ responses for darker displays. Interestingly, and in contrast with our hypothesis, sum luminance had no effect on decision confidence in ‘yes’ responses (\(t(99) = -0.02\), \(p = .983\)), but had a significant negative effect on confidence in ‘no’ responses (\(t(99) = -2.43\), \(p = .017\); see Fig. 3.9, middle and right panels). However, to again anticipate our pre-registered Exp. 3, there we find an effect of sum luminance on confidence in both ‘yes’ and ‘no’ responses, suggesting that this surprising absence of an effect for ‘yes’ responses is likely to be a Type II error.

Figure 3.9: Decision and confidence detection kernels, Experiment 2. Upper panels: decision (left) and confidence (right) kernels for the flickering patch stimuli, showing the effect of sum evidence (sum luminance across both stimuli) on decisions and confidence. Black frames signify a significant effect at the 0.05 significance level controlling for family-wise error rate across the 48 (12 timepoints × 4 positions) comparisons. Lower panels: decision and confidence kernels, averaged across the four bars to yield a single timecourse for the difference in luminance effects in ‘yes’ and ‘no’ responses. Same conventions as in Fig. 3.8.

3.3.4 Detection signal trials

Figure 3.10: Decision and confidence kernels for detection signal trials, Experiment 2. Upper left: mean difference in luminance between ‘yes’ and ‘no’ responses for the target and non-target stimuli. Upper middle and right panels: mean effect of luminance on confidence in the target and non-target stimuli, in ‘yes’ and ‘no’ responses. Middle panels: the effects of luminance on decision and confidence, averaged across the four spatial locations. Lower panels: a subtraction between the effects of luminance in the target and non-target stimuli. Same conventions as Fig. 3.8

We next focused on detection signal trials. This analysis diverged from our pre-registered plan; for the pre-registered analysis, please see Appendix section D.4. In these trials, we could separate stimuli into a signal channel (the brighter, target stimulus) and a noise channel (the darker, non-target stimulus), and ask how random variability in luminance in each channel affected detection decisions and confidence. As in Exp. 1, a positive evidence bias effect in detection was apparent in the decision itself: when deciding whether one of the flickering patches was brighter, participants were more sensitive to positive noise in the brighter patch than to negative noise in the darker patch (\(t(101) = 6.10\), \(p < .001\)). Random fluctuations in luminance in the first 300 milliseconds of the trial also contributed to confidence in detection ‘yes’ responses (hit trials; \(t(99) = 5.08\), \(p < .001\)). In contrast, confidence in ‘no’ responses was negatively sensitive to the overall luminance of the display. A negative effect of luminance on confidence in ‘no’ responses was significant for the non-target stimulus (\(t(98) = -2.64\), \(p = .010\)), and marginally significant for the target stimulus (\(t(98) = -1.67\), \(p = .099\)). Consistent with the results of Exp. 1, confidence in ‘miss’ trials was independent of the contrast in luminance between the right and left stimuli (\(t(98) = 1.26\), \(p = .210\)). Importantly, for both stimuli higher confidence in these trials was associated with lower luminance values, in line with our observation that confidence in detection ‘no’ responses was based on the overall darkness of the display, rather than on relative evidence. Finally, and similar to the results of Exp. 1, detection confidence was not susceptible to a positive evidence bias (\(t(99) = -0.12\), \(p = .901\)). Exp. 3 below was designed to replicate this surprising symmetric weighting of positive and negative evidence in detection confidence (the absence of a positive evidence bias) in a highly-powered design.

3.4 Experiment 3

In Exp. 3 we aimed to replicate our findings using an experimental manipulation, in addition to employing reverse-correlation analysis to random variations between stimuli. Our pre-registered objectives (see our pre-registration document: https://osf.io/hm3fn/) were to first, replicate a positive evidence bias in discrimination, second, replicate a positive evidence bias in detection decisions, and third, replicate the absence of a positive evidence bias in detection confidence.

3.4.1 Methods

3.4.1.1 Participants

The research complied with all relevant ethical regulations, and was approved by the Research Ethics Committee of University College London (study ID number 1260/003). 173 participants (median reported age: 31; range: [18-71]) were recruited via Prolific (prolific.co), and gave their informed consent prior to their participation. They were selected based on their acceptance rate (>95%) and for being native English speakers. Following our pre-registration, we aimed to collect data until we had reached 100 included participants based on our pre-specified inclusion criteria (see https://osf.io/hm3fn/). Our final data set includes observations from 100 included participants. The entire experiment took around 20 minutes to complete. Participants were paid £2.5 for their participation, equivalent to an hourly wage of £7.5.

3.4.1.2 Experimental paradigm

Experiment 3 was identical to Experiment 2 with two changes. First, on half of the trials (high-luminance trials) the luminance of both sets of bars was increased by 2/255 for the entire duration of the display. Second, in order to increase our statistical power for detecting response-specific effects in detection, participants performed four detection blocks and two discrimination blocks. Each block comprised 56 trials. The order of blocks was [detection, discrimination, detection, discrimination, detection, detection] for all participants.
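The block structure of Exp. 3 can be written down as a short schedule-building sketch. The names are hypothetical, and the sketch assumes that high-luminance trials make up exactly half of each block and are shuffled within it; the text only states that half of all trials were high-luminance.

```python
import random

BLOCK_ORDER = ["detection", "discrimination", "detection",
               "discrimination", "detection", "detection"]
TRIALS_PER_BLOCK = 56
LUMINANCE_BOOST = 2  # added to the mean luminance of both sets (RGB 0-255 units)


def build_schedule(rng):
    """Return a list of trial descriptors for the six blocks of Exp. 3."""
    schedule = []
    for block_index, task in enumerate(BLOCK_ORDER):
        half = TRIALS_PER_BLOCK // 2
        boosts = [LUMINANCE_BOOST] * half + [0] * half
        rng.shuffle(boosts)  # assumption: balanced within each block
        for boost in boosts:
            schedule.append({"block": block_index, "task": task, "boost": boost})
    return schedule
```

This yields 336 trials in total, of which 224 are detection trials and 112 are discrimination trials.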

3.4.2 Results

3.4.2.1 Response accuracy

Overall proportion correct was 0.88 in the discrimination and 0.67 in the detection task. Performance for discrimination was significantly higher than for detection (\(M_d = 0.21\), 95% CI \([0.19\), \(0.22]\), \(t(97) = 29.87\), \(p < .001\)), as expected.

3.4.2.2 Overall properties of response and confidence distributions

Similar to Experiments 1 and 2, participants were more likely to respond ‘yes’ than ‘no’ in the detection task (mean proportion of ‘yes’ responses: \(M = 0.53\), 95% CI \([0.51\), \(0.54]\), \(t(97) = 3.73\), \(p < .001\)). We did not observe a consistent response bias in discrimination (mean proportion of ‘right’ responses: \(M = 0.50\), 95% CI \([0.48\), \(0.53]\), \(t(97) = 0.46\), \(p = .647\)).

As in both Experiments 1 and 2, we found behavioural asymmetries between the two detection responses, with ‘yes’ responses being faster (median difference of 71.81 ms), and accompanied by higher levels of confidence (mean difference of 0.09 on a 0-1 scale). As in Exp. 1, we find a difference in metacognitive sensitivity between ‘yes’ and ‘no’ responses (mean difference of 0.03 in AUC units). No asymmetries were observed between the two discrimination responses. For a detailed statistical analysis see Appendix D.3.1.

Figure 3.11: Behavioural asymmetries in metacognitive sensitivity, response time, and overall confidence, in Exp. 3. Same conventions as in Fig. 3.3.

3.4.2.3 Reverse correlation

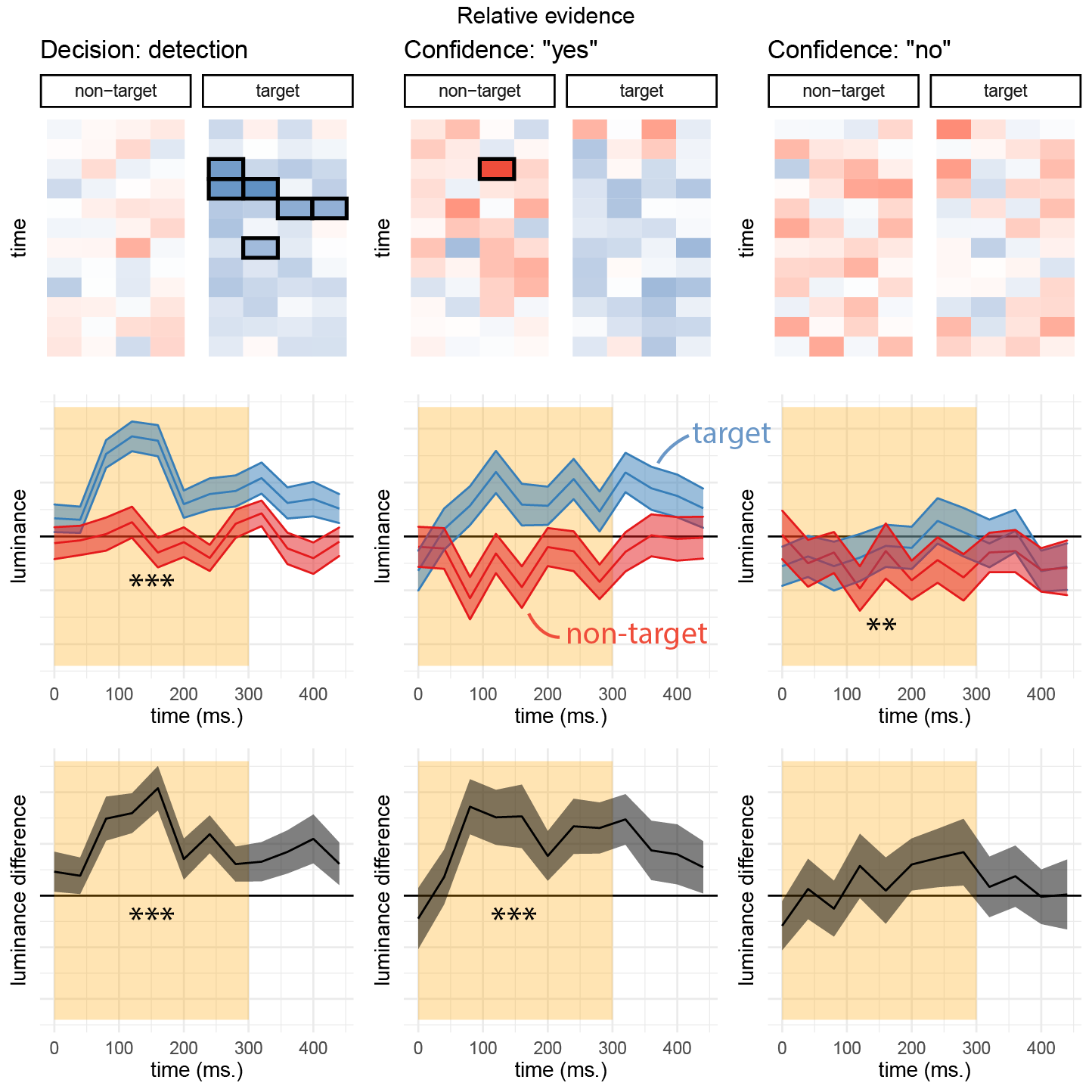

Discrimination decisions

We first focused on reverse correlation analyses that collapsed across high-luminance and standard trials, in order to replicate the same approach used in Exps. 1 and 2. When focusing on standard trials only, the results are qualitatively similar, with the exception of confidence in detection ‘no’ responses (see Appendix D.3.2). Discrimination decisions were sensitive to fluctuations in luminance during the first 300 milliseconds of the trial (\(t(97) = 12.01\), \(p < .001\); see Fig. 3.12, left panels). We found no evidence for a positive evidence bias in discrimination decisions, even when grouping evidence based on the location of the true signal rather than subjects’ decisions (\(t(97) = 0.83\), \(p = .407\)).

Discrimination confidence

Luminance within the first 300 milliseconds had a significant effect on confidence ratings (\(t(97) = 7.23\), \(p < .001\); see Fig. 3.12, right panels). A positive evidence bias in discrimination confidence was only marginally significant in this sample (\(t(97) = 1.63\), \(p = .106\)).

Figure 3.12: Decision and confidence discrimination kernels, Experiment 3. Same conventions as Fig. 3.8.

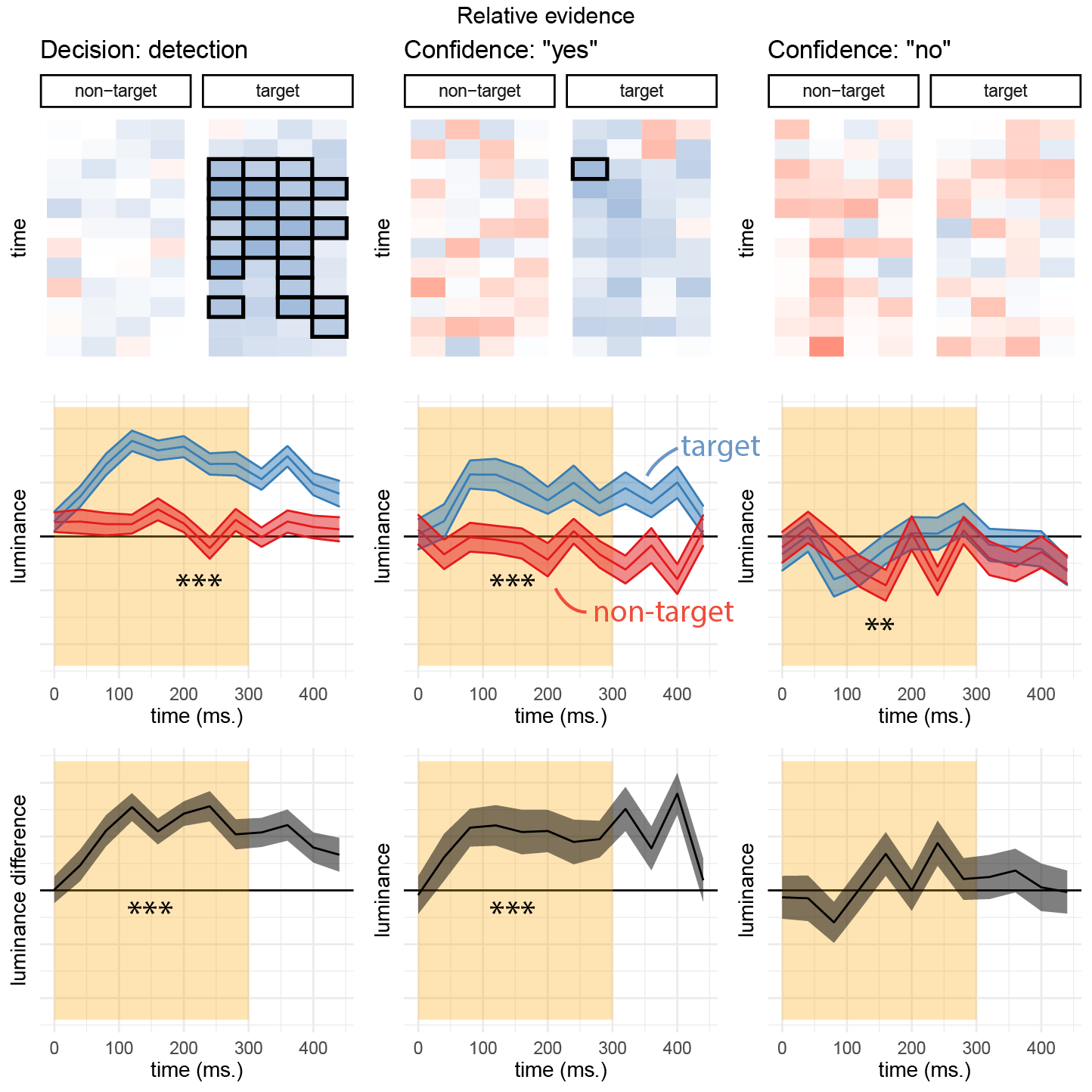

3.4.2.4 Detection

Similar to Exp. 2, sum luminance had a significant effect on participants’ detection responses during the first 300 milliseconds (\(t(97) = 10.94\), \(p < .001\); see Fig. 3.13, left panel). Recall that a surprising finding in Exp. 2 was that sum luminance had no effect on participants’ confidence in their judgments of stimulus presence. In contrast, in Exp. 3 sum luminance had a significant positive effect on decision confidence when reporting target presence (‘yes’ responses; \(t(97) = 3.54\), \(p = .001\)), and a significant negative effect on confidence when reporting target absence (‘no’ responses; \(t(97) = -3.04\), \(p = .003\); see Fig. 3.13, middle and right panels).

Figure 3.13: Decision and confidence detection kernels, Experiment 3. Same conventions as Fig. 3.9.

Figure 3.14: Decision and confidence kernels for detection signal trials, Experiment 3. Same conventions as Fig. 3.10.

3.4.2.5 Detection signal trials

As in Exp. 2, here we also asked how random variability in luminance in the target (brighter) and non-target (darker) channels affected detection decision and confidence. When deciding whether one of the two flickering patches was brighter than the background, participants were sensitive to positive noise in the target patch more than to negative noise in the non-target patch (\(t(97) = 10.94\), \(p < .001\)), consistent with a positive evidence bias in detection decisions and replicating findings from Exps. 1 and 2. Random fluctuations in luminance in the first 300 milliseconds of the trial also contributed to confidence in detection ‘yes’ responses (hit trials; \(t(97) = 6.07\), \(p < .001\)). Importantly, however, and in contrast to the results of Exp. 1 and 2, confidence in ‘yes’ responses was more sensitive to positive evidence than to conflicting evidence (\(t(97) = 3.49\), \(p = .001\)). Together these results are consistent with a positive evidence bias not only for detection decisions, but also for detection confidence.

Confidence in ‘miss’ trials was independent of the contrast in luminance between the right and left stimuli (\(t(96) = 0.89\), \(p = .374\)) but, as described above, confidence in ‘no’ responses was sensitive to the overall luminance of the display. This negative effect of luminance on confidence in ‘no’ responses was significant for the non-target stimulus (\(t(96) = -2.91\), \(p = .005\)), and marginally significant for the target stimulus (\(t(96) = -1.67\), \(p = .099\)). In other words, and similar to our findings in Exp. 2, for both stimuli higher confidence was associated with lower luminance values. This is again consistent with our observation that confidence in judgments about stimulus absence is based on the overall darkness of the display.

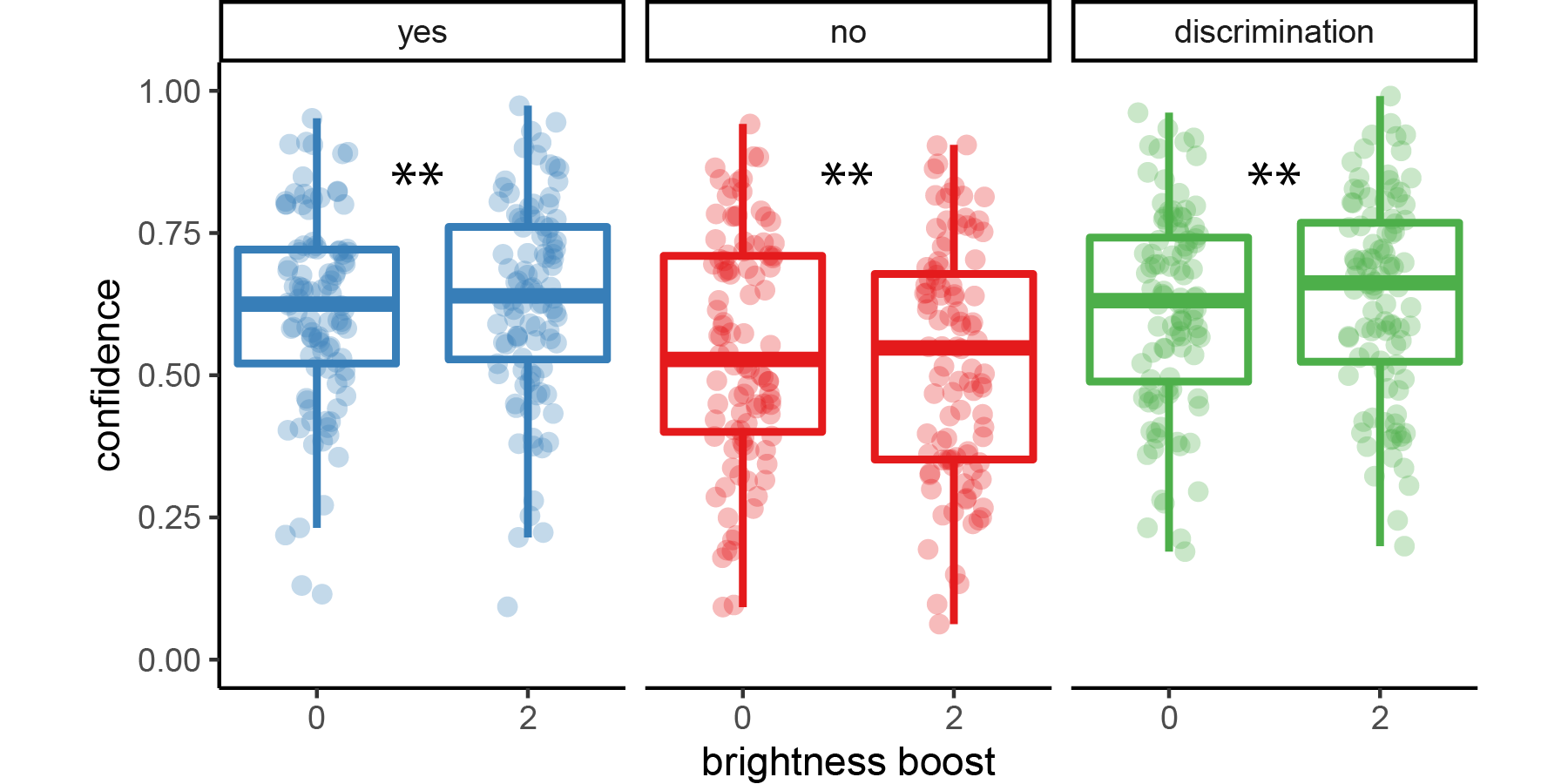

3.4.2.6 Evidence-weighting

In Experiments 1 and 2, confidence in judgments about stimulus presence was invariant to sum evidence (overall motion energy in Exp. 1, sum luminance in Exp. 2). This was surprising for two reasons. First, in both cases sum evidence did have a significant effect on detection decisions. Second, incorporating information about sum evidence into confidence in the presence of a stimulus is rational: a target stimulus is more likely to be present when both stimuli are brighter. As we document above, however, this surprising finding did not replicate in Exp. 3, where detection confidence was now sensitive to the overall brightness of the display. We contrasted the two luminance conditions as a direct experimental test of differential evidence weighting on detection decisions and confidence.

In order to increase statistical power for tests of a positive evidence bias, in Exp. 3 half of the trials consisted of slightly brighter stimuli. In detection, participants were more likely to respond ‘yes’ on these high-luminance trials (\(M = 0.09\), 95% CI \([0.07\), \(0.11]\), \(t(97) = 8.48\), \(p < .001\)). Overall luminance is a valid cue for signal presence, so relying on it for detection judgments is rational. In discrimination, participants were also more confident in high-luminance trials (\(M = 0.02\), 95% CI \([0.01\), \(0.04]\), \(t(97) = 3.28\), \(p = .001\)), replicating a positive evidence bias for discrimination confidence.

In line with the reverse correlation analysis of Exp. 3 (and in contrast to the findings of Experiments 1 and 2), participants were more confident in their ‘yes’ responses when overall luminance was higher (\(M = 0.02\), 95% CI \([0.01\), \(0.03]\), \(t(97) = 3.01\), \(p = .003\)). Our pre-registered Bayesian analysis provided strong evidence for the alternative hypothesis that detection confidence is affected by this manipulation (\(\mathrm{BF}_{\textrm{10}} = 10.90\)). Furthermore, this increase in ‘yes’ response confidence as a function of the brightness manipulation was not significantly different from that observed for discrimination confidence (\(M = -0.01\), 95% CI \([-0.03\), \(0.01]\), \(t(97) = -0.55\), \(p = .584\)).

Finally, and in line with Exp. 2, overall luminance had a significant negative effect on confidence in ‘no’ responses (\(M = -0.02\), 95% CI \([-0.03\), \(-0.01]\), \(t(97) = -3.01\), \(p = .003\)), indicating that participants were more confident in the absence of a target when overall luminance was lower.
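The condition contrasts reported above are within-participant comparisons between high-luminance and standard trials. A minimal sketch of the corresponding paired t statistic is given below; the function name and inputs (one mean confidence value per participant and condition) are hypothetical, and p-values and confidence intervals would additionally require the t distribution, which is not part of the Python standard library.

```python
import math
from statistics import mean, stdev


def paired_t(high, standard):
    """Return (mean difference, t statistic, degrees of freedom).

    high and standard hold one value per participant, in the same order.
    """
    diffs = [h - s for h, s in zip(high, standard)]
    n = len(diffs)
    se = stdev(diffs) / math.sqrt(n)  # standard error of the mean difference
    return mean(diffs), mean(diffs) / se, n - 1
```

For example, `paired_t(confidence_high, confidence_standard)` applied to ‘yes’ responses would give a positive mean difference if participants are more confident on brighter displays.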

Figure 3.15: Difference in confidence between standard and higher-luminance trials for the three response categories (detection ‘yes’ and ‘no’ responses, and discrimination responses) in Exp. 3. Box edges and central lines represent the 25th, 50th and 75th percentiles. Whiskers cover data points within four inter-quartile ranges around the median. Stars represent significance in a two-sided t-test: **: p < 0.01.

3.5 Discussion

In three experiments, we compared the perceptual drivers of decisions and confidence ratings in discrimination and detection, matched for difficulty (Exp. 1) and signal strength (Exp. 2 and 3). In order to measure the contribution of perceptual evidence to detection and discrimination confidence ratings, we followed Zylberberg, Barttfeld, & Sigman (2012) and applied reverse correlation to noisy stimuli in perceptual decision making tasks. We fully replicated the main results of Zylberberg and colleagues: decisions and confidence were affected by perceptual evidence in the first 300 milliseconds of the trial, peaking at around 200 milliseconds. We also successfully replicated a positive evidence bias for discrimination confidence: confidence in the discrimination task was more affected by supporting than by conflicting evidence. A positive evidence bias in discrimination confidence judgments may indicate that participants adopt a detection-like disposition in their metacognitive judgments, focusing on sum evidence rather than relative evidence when rating their confidence.

In Experiments 1 and 2, detection decisions but not confidence ratings also showed a positive evidence bias: when making a detection response participants mostly ignored random fluctuations in stimulus energy that were not aligned with the true, presented signal, but these fluctuations were later taken into account when rating their confidence. Based on this surprising finding, in Experiment 3 we pre-registered a hypothesis that detection confidence should be equally sensitive to positive and negative evidence. To increase our statistical sensitivity, we doubled the number of detection trials and included a direct manipulation of positive evidence. Results from Experiment 3 provided clear evidence against the hypothesis derived from Experiments 1 and 2, and support an unequal weighting of positive and negative evidence not only for detection decisions, but also for detection confidence judgments.

Previous accounts of the positive evidence bias in discrimination confidence presented it as a heuristic that participants adopt due to cognitive constraints in the face of unreasonably vast representational spaces (Maniscalco, Peters, & Lau, 2016) or due to an asymmetric encoding of signal and noise (Miyoshi & Lau, 2020). A heuristic use of evidence in confidence ratings, but not in the decision itself, in turn implies that different processes are involved in the generation of decisions and confidence ratings, and that participants are in some sense being irrational when discarding relevant evidence that could be used in constructing confidence.

Similarly, a positive-evidence bias was recently demonstrated in an artificial neural network trained to classify hand-written digits and, in parallel, predict its classification accuracy (Webb, Miyoshi, So, & Lau, 2021). The network was trained on images varying in contrast and visual noise, and later tested on overlays of two digits, varying in contrast only. Under this training regime, the network was more confident for high-contrast images, controlling for classification accuracy. Similar to the heuristic account of Maniscalco, Peters, & Lau (2016), here also a positive evidence bias reflected the application of an inductive bias acquired in real life, or in training, to a test setting where doing so is maladaptive.

An alternative possibility is that a single, Bayes-rational model with a valid prior is governing both choice and confidence ratings, but that the form of that model is yet to be specified. For instance, one possible driver of a positive evidence bias in discrimination confidence ratings is the higher informational value of signal than noise. If the signal channel holds more information about signal identity or stimulus presence, giving more weight to information from this channel is rational. This is the case in unequal-variance SDT settings, where signal is sampled from a wider range of values than noise. As an example, if noise is sampled from a Gaussian distribution with mean 0 and variance 1 and signal from a Gaussian distribution with mean 2 and variance 9, sampling the value 7 (about 1.7 standard deviations to the right of the signal mean) is much more informative about the presence or absence of a signal than sampling the value -2 (two standard deviations to the left of the noise mean), because the first is only likely if sampled from the signal distribution (\(\frac{p(x|signal)}{p(x|noise)}>1,000,000,000\)), but the second is about equally likely under both distributions (\(\frac{p(x|signal)}{p(x|noise)}\approx 1.01\)).² Similarly, if the representation of coherent motion is more variable across trials than the representation of random motion, participants would be rational to give more weight to evidence for coherent motion in one channel than evidence for its absence in the other channel.
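The unequal-variance example above can be checked numerically. The sketch below evaluates the likelihood ratio at the two sampled values and the two Kullback-Leibler divergences between the signal and noise distributions, using the closed-form expressions for Gaussians. Function names are hypothetical; the means and variances are those of the example.

```python
import math

SIGNAL = (2.0, 9.0)  # (mean, variance) of the signal distribution
NOISE = (0.0, 1.0)   # (mean, variance) of the noise distribution


def normal_pdf(x, mean, var):
    """Density of a Gaussian with the given mean and variance at x."""
    return math.exp(-(x - mean) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)


def likelihood_ratio(x):
    """p(x | signal) / p(x | noise)."""
    return normal_pdf(x, *SIGNAL) / normal_pdf(x, *NOISE)


def kl_normal(p, q):
    """KL divergence D_KL(p || q) between two univariate Gaussians."""
    (m1, v1), (m2, v2) = p, q
    return 0.5 * (math.log(v2 / v1) + (v1 + (m1 - m2) ** 2) / v2 - 1)


if __name__ == "__main__":
    print(likelihood_ratio(7.0))   # very large: 7 is implausible under noise
    print(likelihood_ratio(-2.0))  # close to 1: -2 is plausible under both
    print(kl_normal(SIGNAL, NOISE), kl_normal(NOISE, SIGNAL))
```

The asymmetry between the two divergences expresses the same point as the two likelihood ratios: on average, a sample drawn from the wider signal distribution carries more evidence about its source than a sample drawn from the noise distribution.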

Higher variability in the representation of signal is often built into the experiment itself. For example, in our Exp. 1, following Zylberberg, Barttfeld, & Sigman (2012), the number of coherently moving dots was itself randomly determined, sampled from a Gaussian distribution once every four frames. This means that there were two sources of variability for the true direction of motion (variability in the direction of randomly moving dots and variability in the number of coherently moving dots), but only one source of variability for the opposite direction (variability in the direction of randomly moving dots). But even when signal is not made more variable by design, the representation of signal is expected to be more variable due to the Weber-Fechner law (Fechner & Adler, 1860) and the coupling between firing rate mean and variability implied by a Poisson form of neuronal firing rates.

To obtain qualitative predictions for such effects, we simulated a stimulus-dependent noise model (full simulation details, including source code, are available in Appendix D.5). To model the unequal-variance nature of the perception of signal and noise, perceptual noise was sampled from a normal distribution with mean 0 and a standard deviation proportional to an exponential function of the sensory sample (\(x'=x+\epsilon; \epsilon \sim \mathcal{N}(0,2^x)\)). We chose an exponential mapping so that the standard deviation is always positive, but qualitatively similar results are obtained for a linear mapping from sensory samples to sensory noise. A Bayes-rational agent had full knowledge of this generative model, and used it to extract a log likelihood ratio when making a decision and rating its confidence.

This simulation gave rise to a pronounced positive evidence bias in discrimination confidence ratings and in detection decisions (see Fig. 3.16). The agent was more sensitive to variations in the signal channel when deciding whether a signal was present, when rating its confidence in discriminating between two stimulus classes, and when rating its confidence in decisions about stimulus presence. This is in line with our empirical findings. Importantly, in this model decision and confidence ratings are the output of the same Bayes-rational process applied to a situation where perceptual noise scales with signal strength, and do not reflect any suboptimality in evidence weighting.
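The stimulus-dependent noise model described above can be illustrated with a simplified two-channel sketch. This is not the simulation code of Appendix D.5. It assumes a hypothetical signal strength `MU`, treats the second parameter of \(\mathcal{N}(0,2^x)\) as a standard deviation, assumes that a signal is equally likely to appear in either channel, and takes the absolute log likelihood ratio as confidence.

```python
import math
import random

MU = 1.0  # hypothetical signal strength; noise channels have strength 0


def noise_sd(x):
    """Perceptual noise grows exponentially with the sensory sample."""
    return 2.0 ** x


def log_pdf(obs, x):
    """Log density of a noisy observation given the true sensory sample x."""
    sd = noise_sd(x)
    return -math.log(sd) - 0.5 * math.log(2 * math.pi) - (obs - x) ** 2 / (2 * sd ** 2)


def channel_llr(obs):
    """Log likelihood ratio of 'signal' versus 'noise' for one channel."""
    return log_pdf(obs, MU) - log_pdf(obs, 0.0)


def discrimination_llr(obs):
    """LLR for 'signal in channel 0' versus 'signal in channel 1'."""
    return channel_llr(obs[0]) - channel_llr(obs[1])


def detection_llr(obs):
    """LLR for 'signal in either channel' versus 'no signal in any channel'."""
    return math.log(0.5 * (math.exp(channel_llr(obs[0])) + math.exp(channel_llr(obs[1]))))


def simulate_trial(x, rng):
    """Add stimulus-dependent noise to the sample pair x, then decide and rate."""
    obs = tuple(xi + rng.gauss(0.0, noise_sd(xi)) for xi in x)
    llr = discrimination_llr(obs)
    return (0 if llr >= 0 else 1), abs(llr)
```

With these settings, the observations (1, 0) and (2, 1) have the same relative evidence (a difference of 1), but `discrimination_llr` is larger in magnitude for (2, 1), so the agent is more confident when sum evidence is higher even though it applies the same likelihood-ratio rule throughout.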

Figure 3.16: Model predictions for a stimulus-dependent noise model. Sensory noise is higher for stronger sensory samples. For each point on the grid, we simulated 200 trials by sampling a sensory sample and extracting a decision and a confidence rating according to the log likelihood ratio of the two hypotheses. Left: two example sensory samples, marked with an X, have the same relative evidence, but absolute evidence is higher for the sample on the upper right. This sample was given a higher-confidence rating, consistent with a positive evidence bias in behaviour, here emerging from a Bayes-rational response and confidence strategy.

However, a stimulus-dependent-noise model makes predictions not only for the effect of sum evidence on discrimination and detection confidence ratings, but also for the effect of sum evidence on decision performance (\(d'\)). Specifically, if stronger stimuli are also noisier, sum evidence should have a positive effect on confidence, but a negative effect on response accuracy. In contrast with this prediction, in Exp. 3, an increase to the luminance of both stimuli had no effect on accuracy (\(M = 0.01\), 95% CI \([-0.01\), \(0.03]\), \(t(97) = 1.06\), \(p = .294\)), but boosted discrimination confidence nonetheless. Moreover, the effect of overall luminance on confidence was positively correlated with its effect on decision accuracy (\(r = .32\), 95% CI \([.13\), \(.49]\), \(t(96) = 3.33\), \(p = .001\)) and not negatively correlated, as would be expected if degraded accuracy and higher confidence were both driven by higher sum evidence.

Goal-contingent effects also weigh against a Bayes-rational account of the positive evidence bias in discrimination confidence. In a recent study, Sepulveda et al. (2020) presented participants with pairs of dot arrays and asked them to choose the array with more white dots. Participants were more confident when both arrays included more dots, replicating a positive evidence bias. Critically, the experiment also included a second condition, in which participants were asked to choose the array with fewer white dots. In this condition, confidence was higher when both arrays included fewer dots: an effect opposite to the positive evidence bias. This effect was also related to participants’ information sampling behaviour in the two conditions: they spent more time fixating on the array containing more dots under typical instructions, but the opposite was the case when they were instructed to select the array with fewer dots. In a Bayes-rational model, evidence weighting should be identical for these two equivalent ways of framing the task instructions. This finding is also inconsistent with heuristic models that are based on variance differences between the encoding of signal and noise, as those are not expected to change as task instructions change.

Instead, our findings, as well as those of Sepulveda et al. (2020), are generally in line with a heuristic account of positive evidence bias that posits limits on cognitive resources when coping with high-dimensional representations (Maniscalco, Peters, & Lau, 2016). Basing confidence on positive evidence in such a world frees agents from the need to consider an infinite number of alternative hypotheses. Similarly, the findings of Sepulveda et al. (2020) can be accounted for by a model in which participants flexibly allocate attention to the choice-consistent dimension of evidence (more vs. fewer dots), while ignoring other dimensions. What constitutes positive evidence is then rationally dependent on an agent’s specific goals and attentional set at the time of performing a task.

We reused the original Matlab code that was used for Exp. 1 in Zylberberg et al. (2012), kindly shared by Ariel Zylberberg. ↩︎

In other words, the expected log likelihood ratio when sampling from the signal distribution is higher (in absolute terms) than when sampling from the noise distribution, or \(D_{KL}(signal||noise)>D_{KL}(noise||signal)\).↩︎